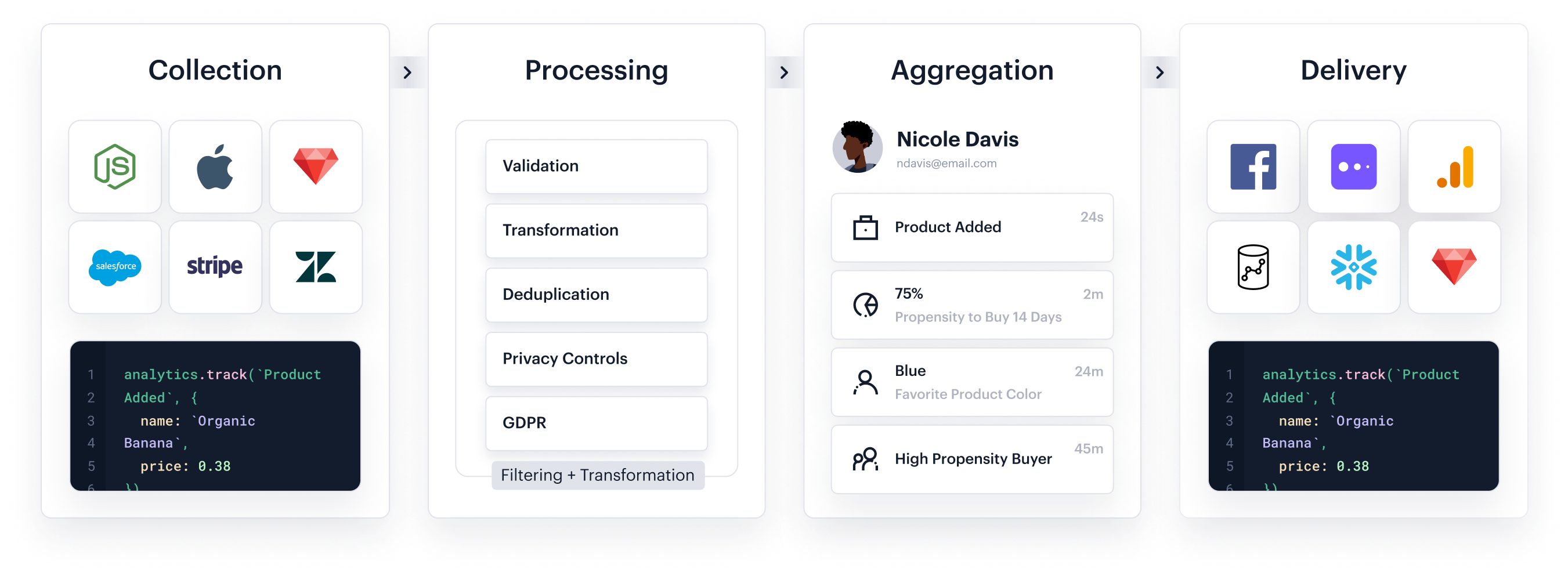

Collection

Customer data lives everywhere: your website, your mobile apps, and internal tools.

That’s why collecting and processing all of it is a tricky problem. Segment has built libraries, automatic sources, and functions to collect data from anywhere—hundreds of thousands of times per second.

We’ve carefully designed each of these areas to ensure they’re:

- Performant (batching, async, real-time, off-page)

- Reliable (cross-platform, handle rate-limits, retries)

- Easy (setup with a few clicks, elegant, modern API)

Here’s how we do it.

Collection

Libraries

You need to collect customer data from your website and mobile apps tens of thousands of times per second. It should never crash and always work reliably. Here’s how we do it…

Collection

Cloud Sources

To fully understand your users, you’ll have to mirror the databases of your favorite SaaS tools (Adwords, Stripe, Salesforce, and more) into your systems of record. Here’s how we do it…

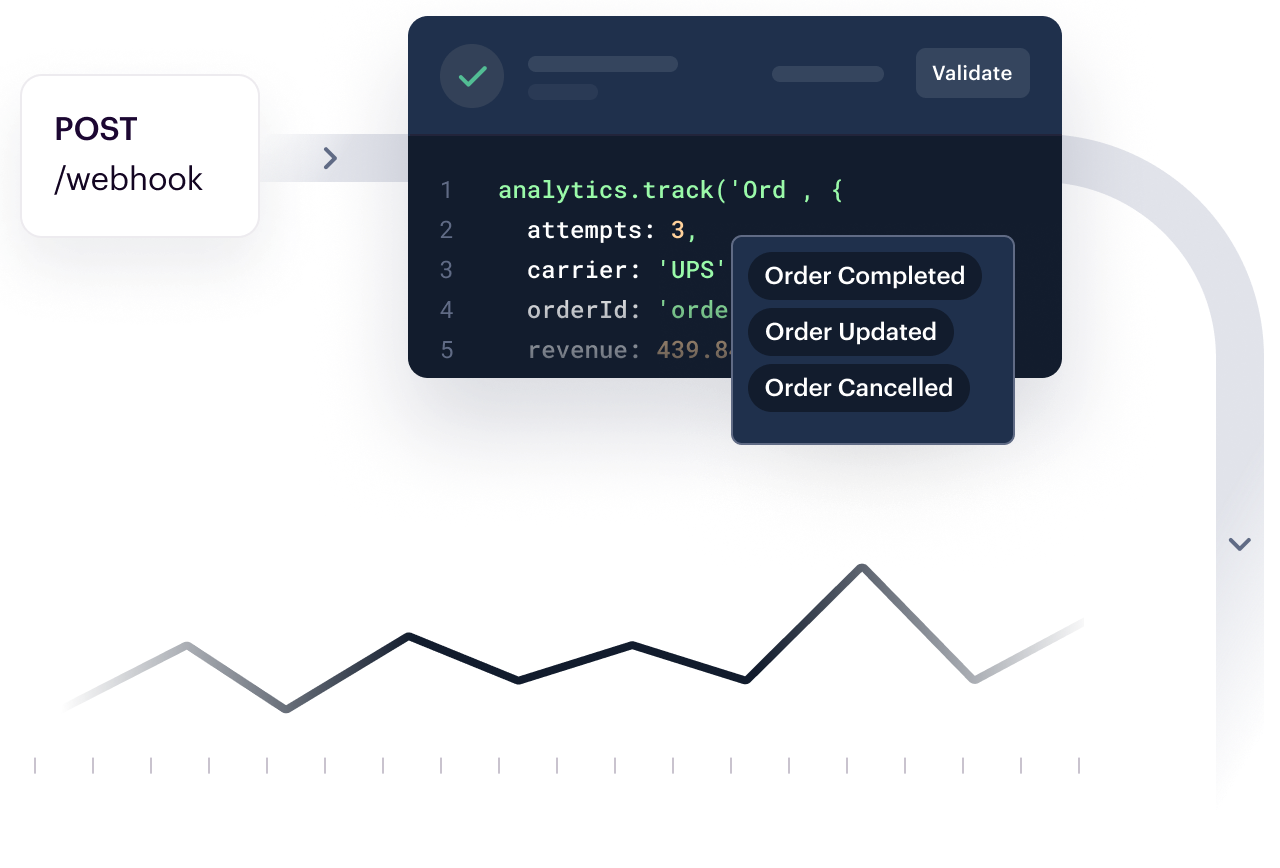

Collection

Source Functions

Often, you’ll want to pull in data from arbitrary web services or internal sources. They’ll have webhooks for triggering new data entries, but no standardized way of getting that data. Here’s how we do it…

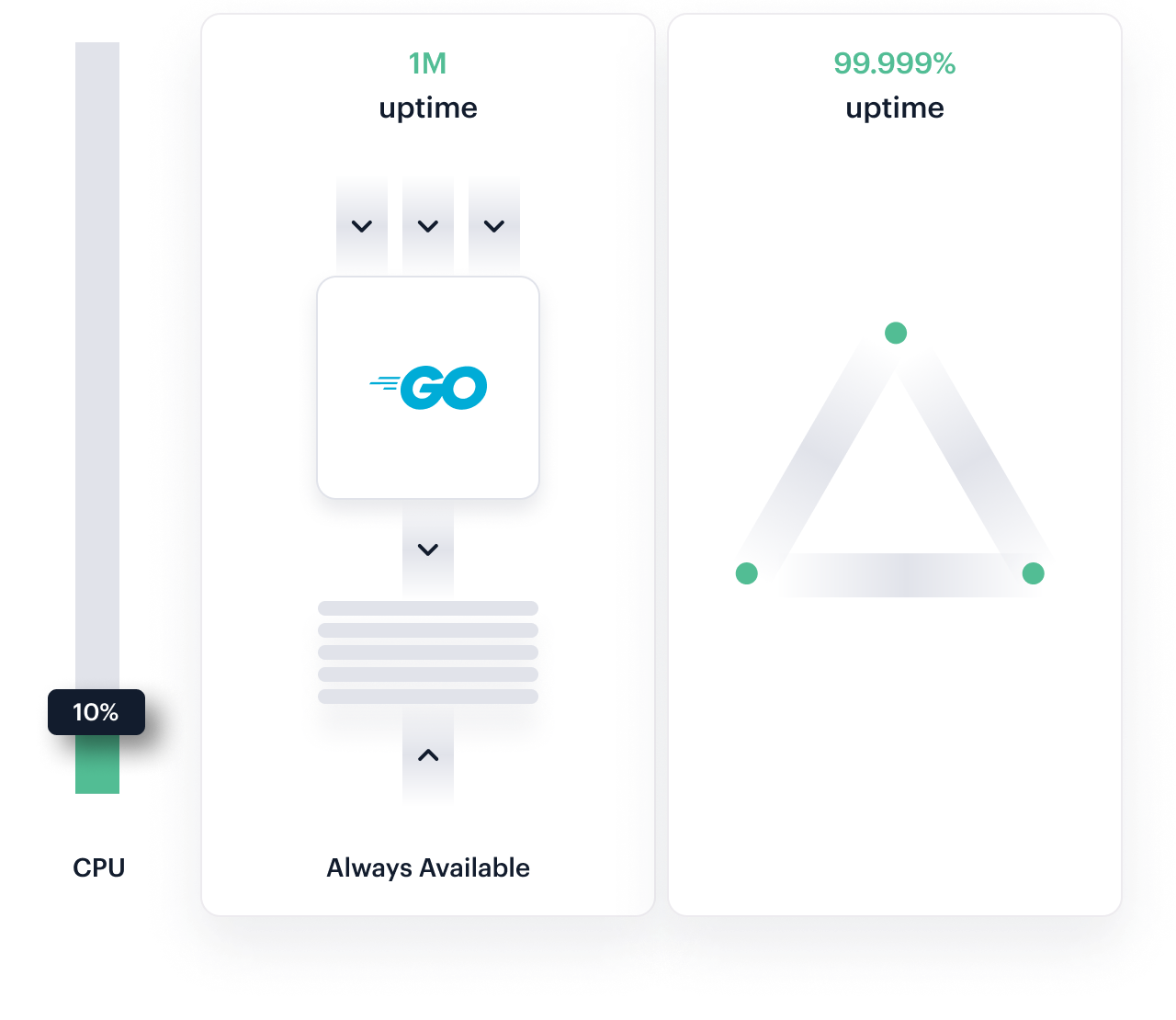

Collection

API

To collect all this data, you need an API that never goes down. We’ve ensured that our high-performance Go servers are always available to accept new data. With a 30ms response time, and “six nines” of availability, we’re collecting 1M rps, handling spikes of 3-5x in minutes.

Processing

Data can be messy. Anyone who has dealt with third-party APIs, JSON blobs, and semi-structured text knows that only 20-30% of your time is spent driving insights. Most of your time is spent cleaning the data you already have.

At minimum, you’ll want to make sure your data infrastructure can:

- Handle GDPR suppressions across millions of users

- Validate and enforce arbitrary inputs

- Allow you to transform and format individual events

- Deduplicate retried requests

Here’s how we do it.

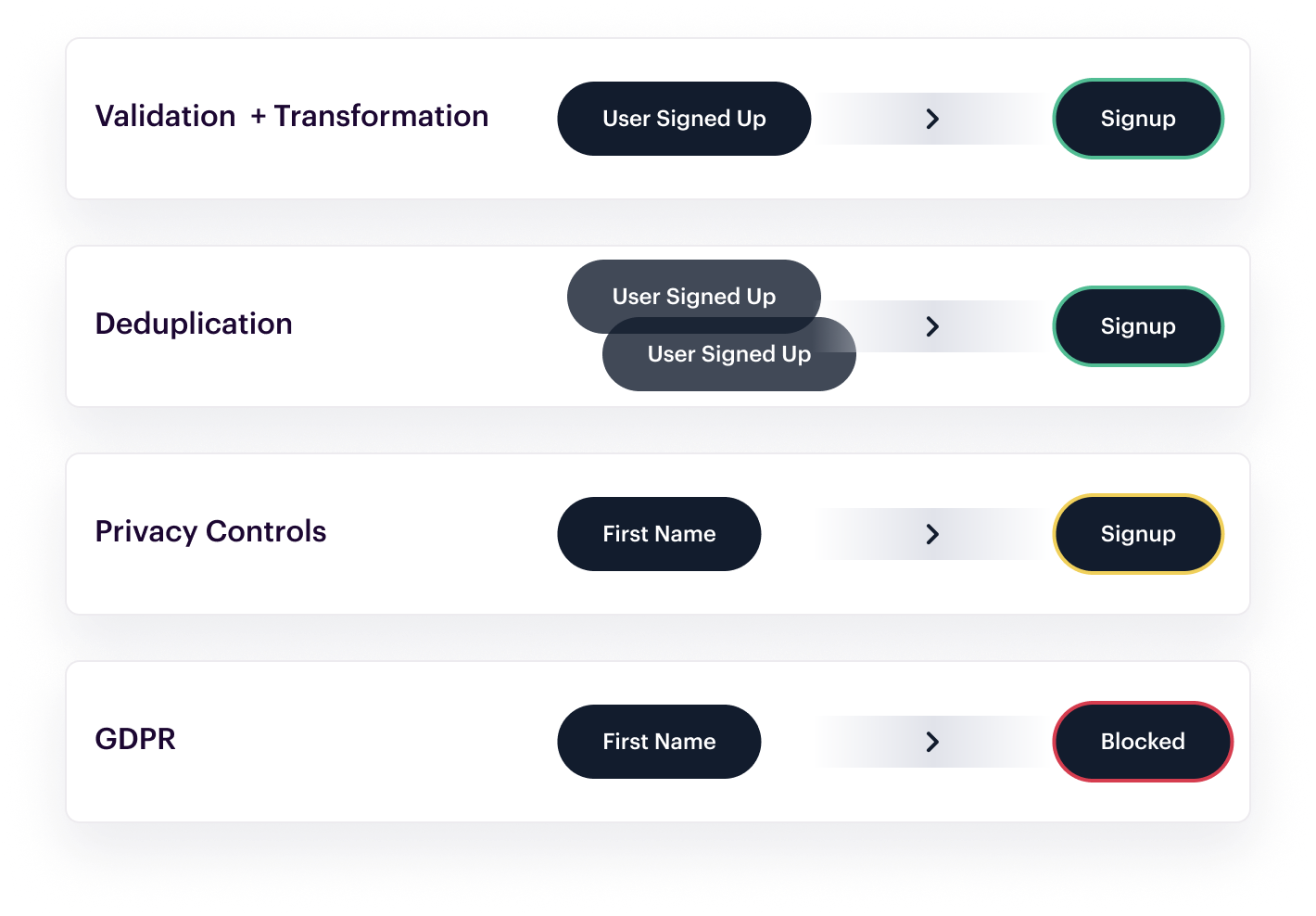

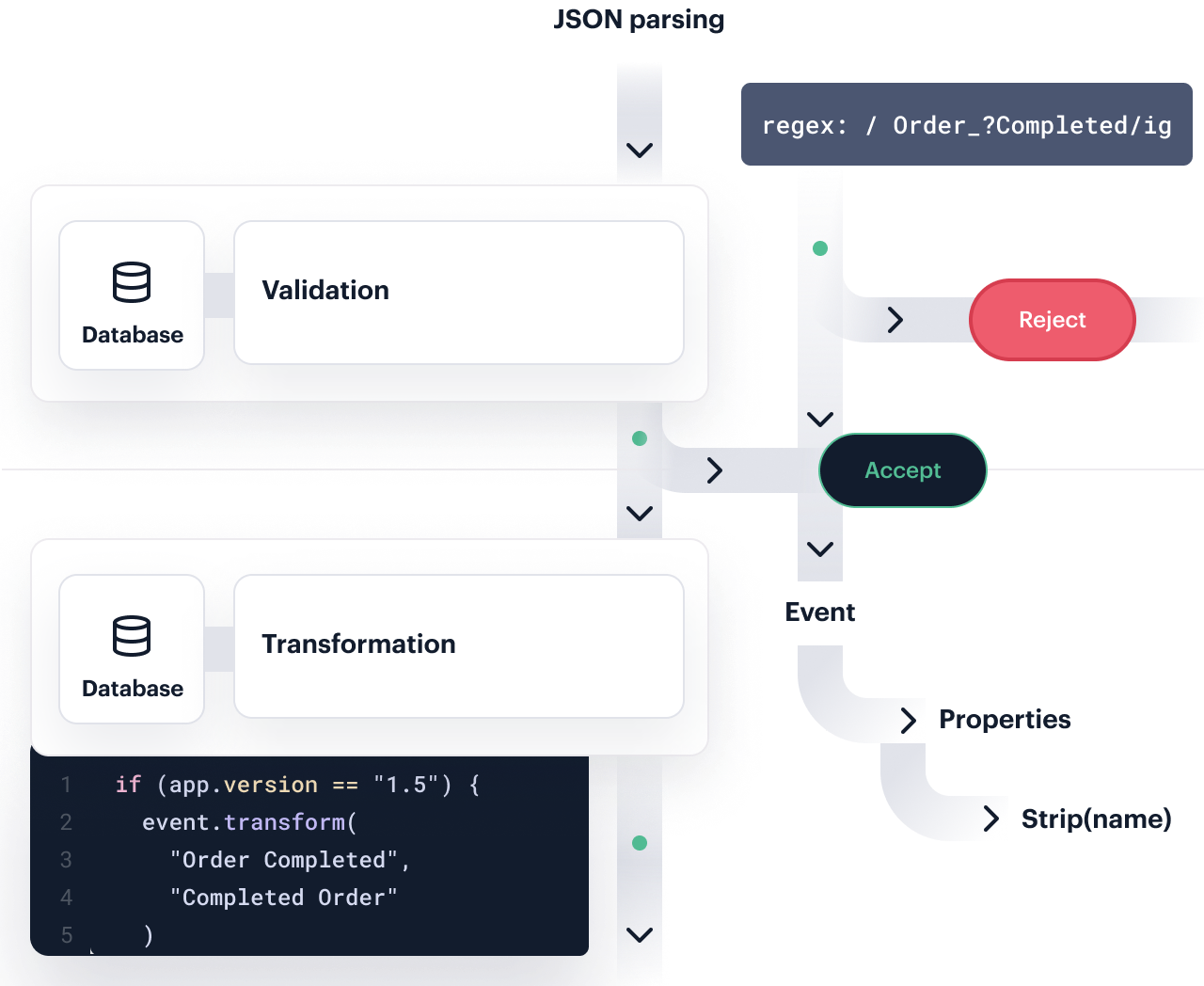

Processing

Validation and Transformation

The data you’re collecting isn’t always in the format you want. It’s important that anyone (PMs, marketers, engineers) be able to help clean the data you’re collecting to match your needs.
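To make that concrete, here’s a minimal sketch of a validate-then-transform step. It assumes a made-up tracking plan (`REQUIRED_FIELDS`) and hypothetical helper names (`validate`, `transform`); it illustrates the idea and is not a description of how Segment implements it.

```python
# Hypothetical tracking plan: field name -> expected Python type.
REQUIRED_FIELDS = {"event": str, "user_id": str, "properties": dict}


def validate(event):
    """Return a list of violations; an empty list means the event is valid."""
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in event:
            errors.append(f"missing field: {field}")
        elif not isinstance(event[field], expected_type):
            errors.append(f"{field} should be {expected_type.__name__}")
    return errors


def transform(event):
    """Return a copy of the event with its name normalized to snake_case."""
    cleaned = dict(event)
    cleaned["event"] = event["event"].strip().lower().replace(" ", "_")
    return cleaned
```

Running `validate` before `transform` means malformed events can be blocked or flagged before any formatting rules touch them.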

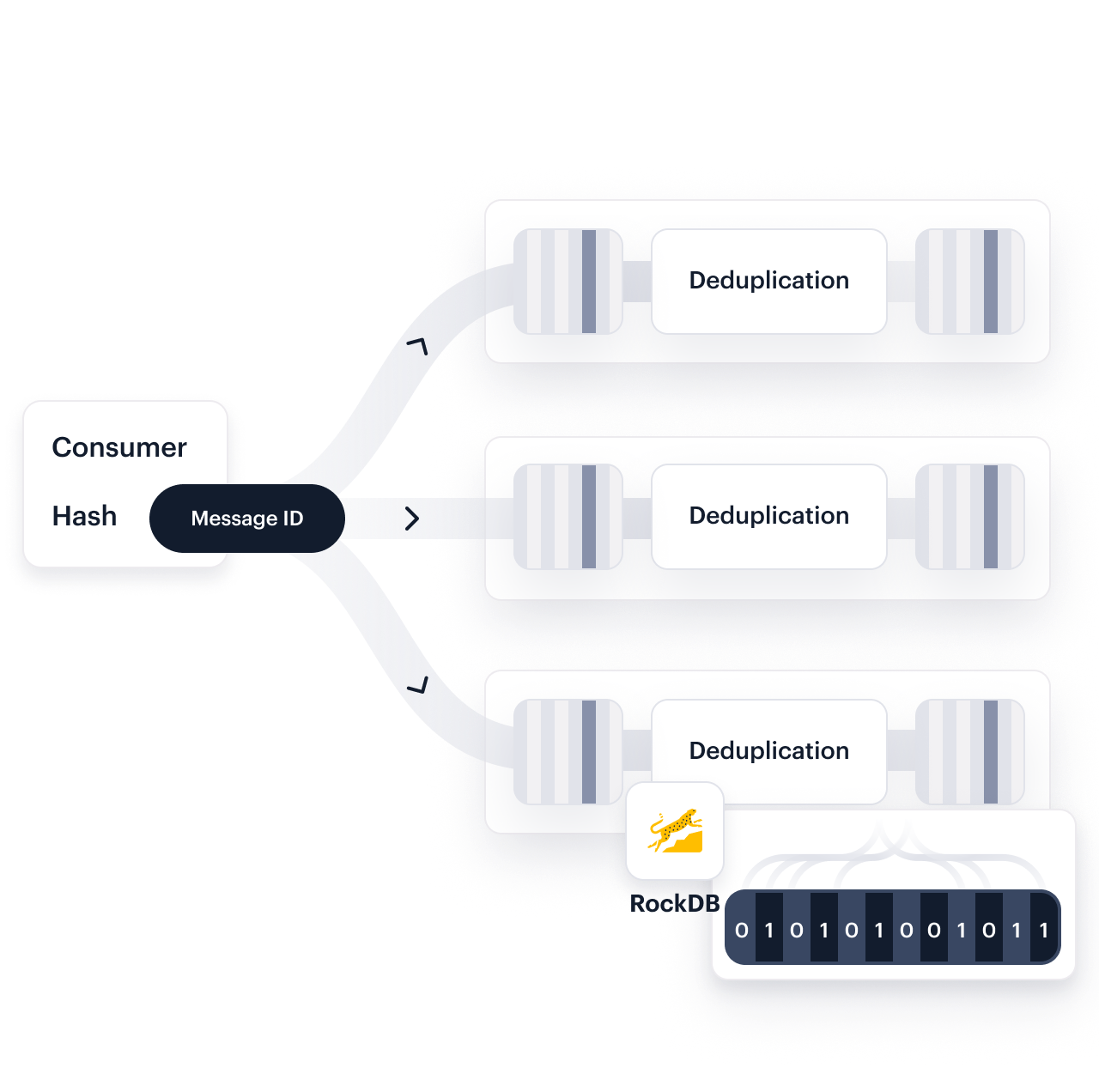

Processing

Deduplication

What’s worse than missing data? Duplicated data. It’s impossible to trust your analysis if events show up 2, 3, or more times. That’s why we’ve invested heavily in our Deduplication Infrastructure. It reduces duplicate data by 0.6% over a 30-day window.
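The core idea can be sketched in a few lines: remember each message ID for the length of the window, and drop anything you’ve already seen. The `Deduplicator` class and its `message_id` argument are hypothetical names for illustration, and this in-memory version assumes timestamps arrive in non-decreasing order. A production system at this scale would need durable, partitioned storage.

```python
from collections import OrderedDict

WINDOW_SECONDS = 30 * 24 * 60 * 60  # 30-day window, as in the text above


class Deduplicator:
    """Drops events whose ID was already seen inside the window (illustrative only)."""

    def __init__(self, window_seconds=WINDOW_SECONDS):
        self.window_seconds = window_seconds
        # message_id -> first-seen timestamp, oldest entry first.
        self._seen = OrderedDict()

    def _expire(self, now):
        # Evict IDs that have aged out of the window.
        while self._seen:
            message_id, seen_at = next(iter(self._seen.items()))
            if now - seen_at < self.window_seconds:
                break
            self._seen.popitem(last=False)

    def accept(self, message_id, now):
        """Return True if the event is new, False if it is a duplicate."""
        self._expire(now)
        if message_id in self._seen:
            return False
        self._seen[message_id] = now
        return True
```

A retried request carries the same ID as the original, so `accept` returns `False` for it until the ID ages out of the window.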

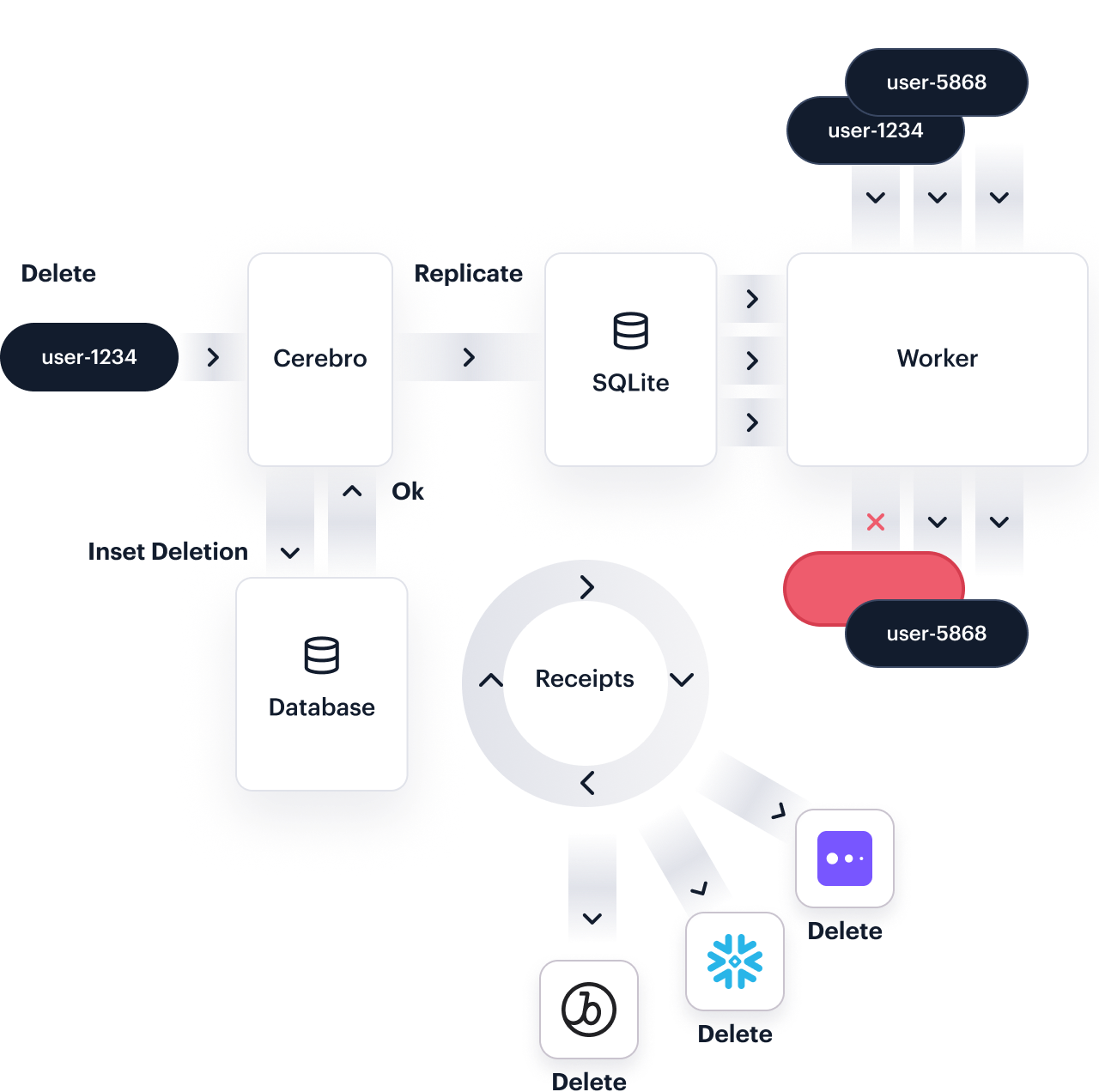

Processing

GDPR Suppression and Deletion

If you’re working with data today, you must be compliant with the GDPR and CCPA. Both grant individual users the ability to request that their data be deleted or suppressed. It’s sort of like finding 100k needles in 100 billion haystacks—but here’s how we do it.

Aggregation

Individual events don’t tell the full story of a user. For that, you need to combine all of that data into a single profile.

This is where most systems hit their scaling limits. Not only do they need to process tens of thousands of events per second, but they need to route that data to a single partition. This requires:

- Querying a single profile in real-time in milliseconds

- Scanning across millions of user histories to find a small group

Here’s how we do it.

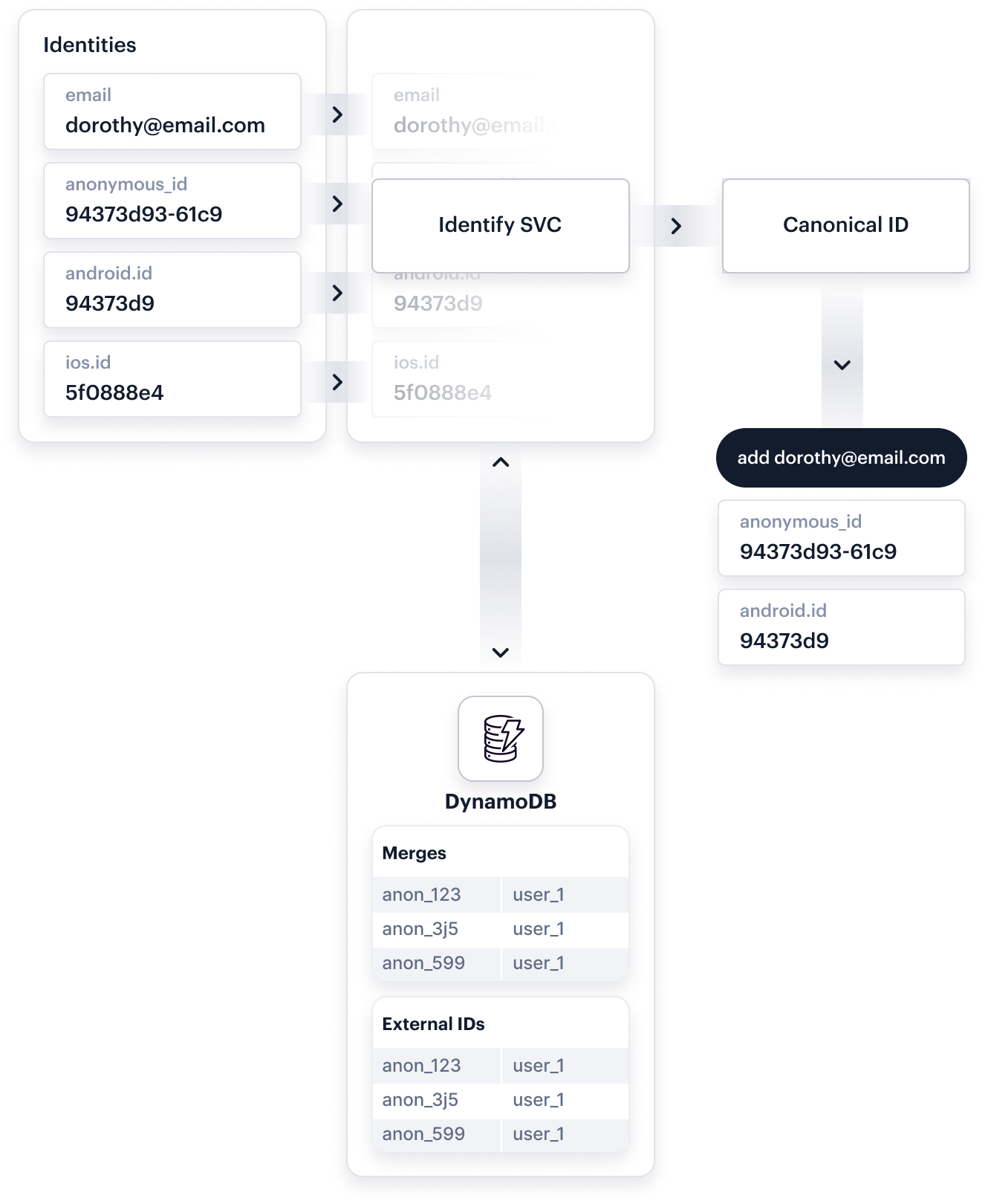

Aggregation

Identity Resolution

Emails, device IDs, primary keys in your database, account IDs—a single user might be keyed in hundreds of different ways! You can’t just overwrite those ties or endlessly traverse cycles in your identity graph. You need a bullet-proof system for tying those users together. Here’s how we do it.
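One common way to model this kind of merging is a disjoint-set (union-find) structure, sketched below. This is a stand-in technique chosen for illustration, not a description of Segment’s identity graph; the `IdentityGraph` class and the identifier strings are hypothetical. Because lookups follow parent pointers up to a root, linking identifiers in a cycle never causes an endless traversal.

```python
class IdentityGraph:
    """Groups identifiers that belong to the same user (illustrative union-find)."""

    def __init__(self):
        self._parent = {}  # identifier -> parent identifier

    def _find(self, identifier):
        # Unknown identifiers start out as their own root.
        self._parent.setdefault(identifier, identifier)
        root = identifier
        while self._parent[root] != root:
            root = self._parent[root]
        # Path compression: point every node on the path directly at the root.
        while self._parent[identifier] != root:
            next_identifier = self._parent[identifier]
            self._parent[identifier] = root
            identifier = next_identifier
        return root

    def link(self, a, b):
        """Record that identifiers a and b belong to the same user."""
        root_a, root_b = self._find(a), self._find(b)
        if root_a != root_b:
            self._parent[root_b] = root_a

    def same_user(self, a, b):
        return self._find(a) == self._find(b)
```

Linking `email:a@example.com` to `device:123`, and `device:123` to `user_id:42`, makes all three resolve to the same root, so a lookup by any one of them lands on the same profile.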

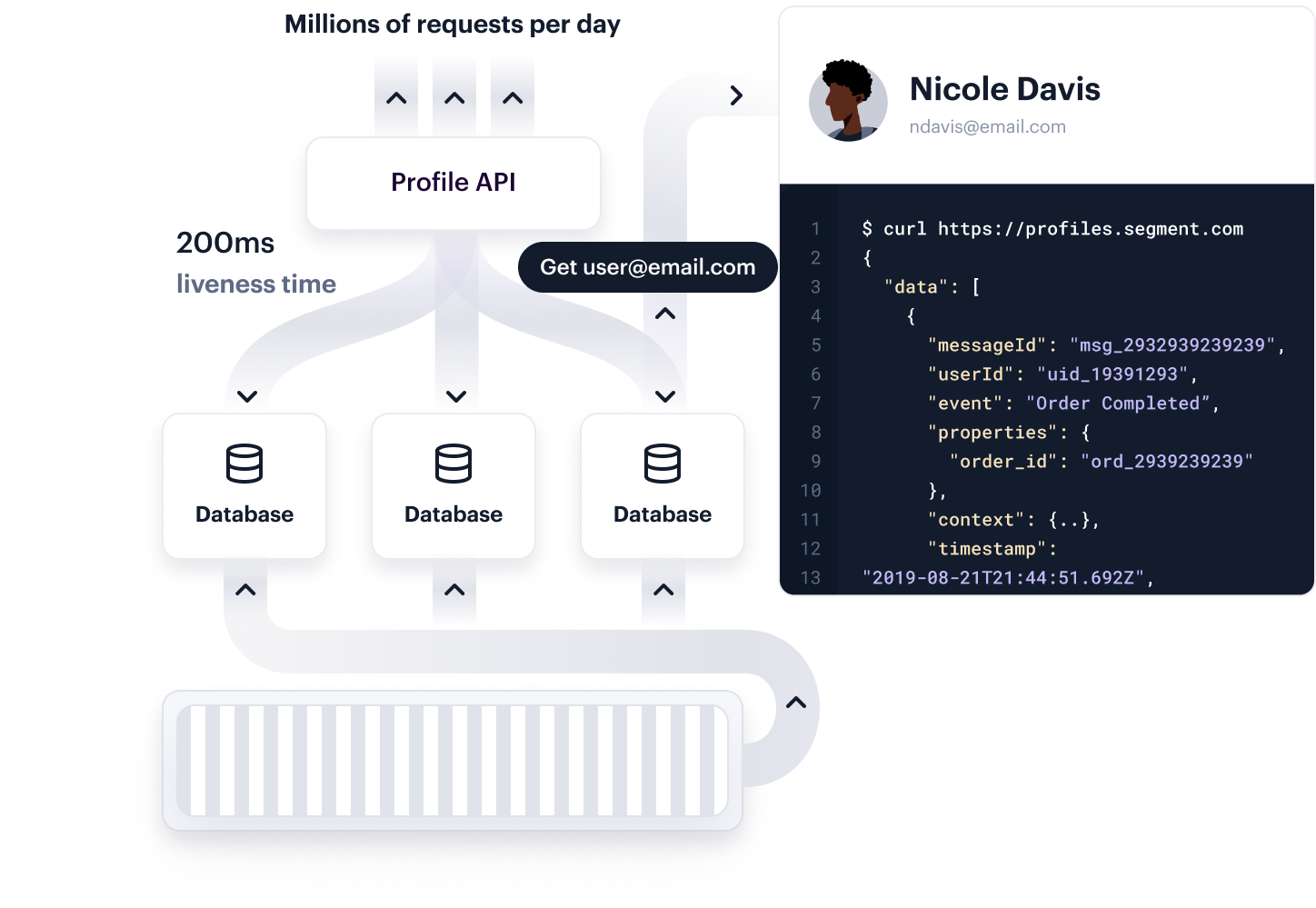

Aggregation

Profile API

Personalizing your website isn’t easy. You need millisecond response times so the page loads quickly. You need a full event history of your user. And you need to be able to make those decisions as the page is loading. Here’s how we do it.

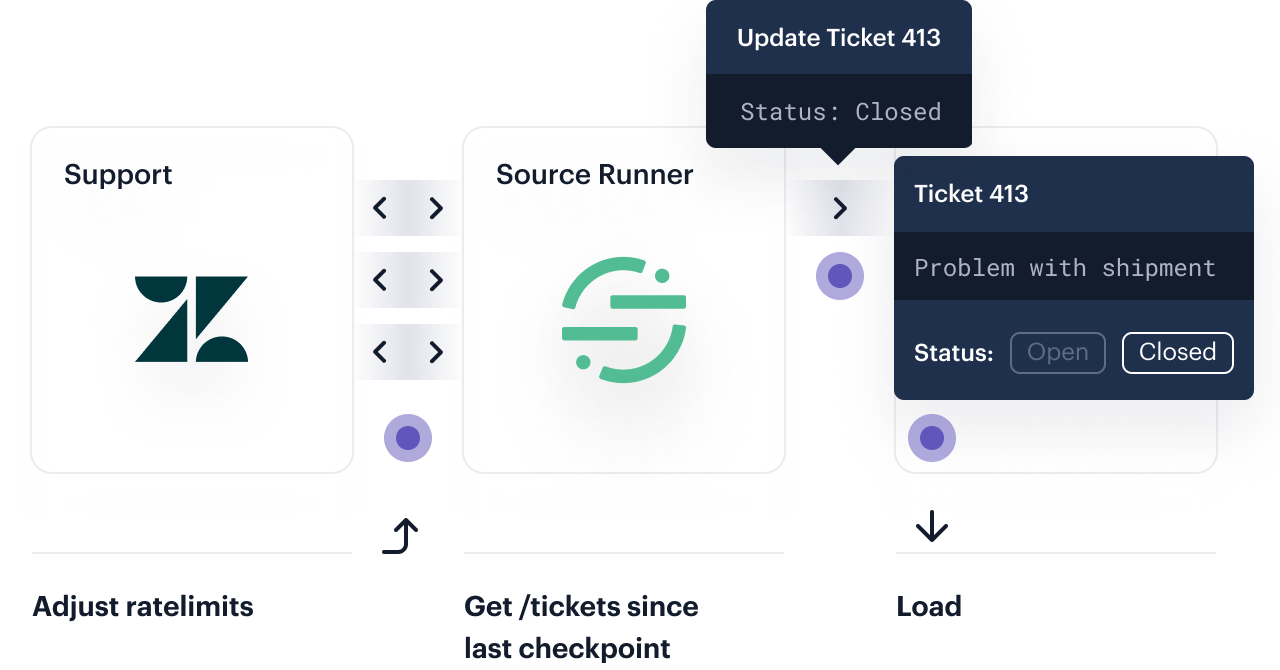

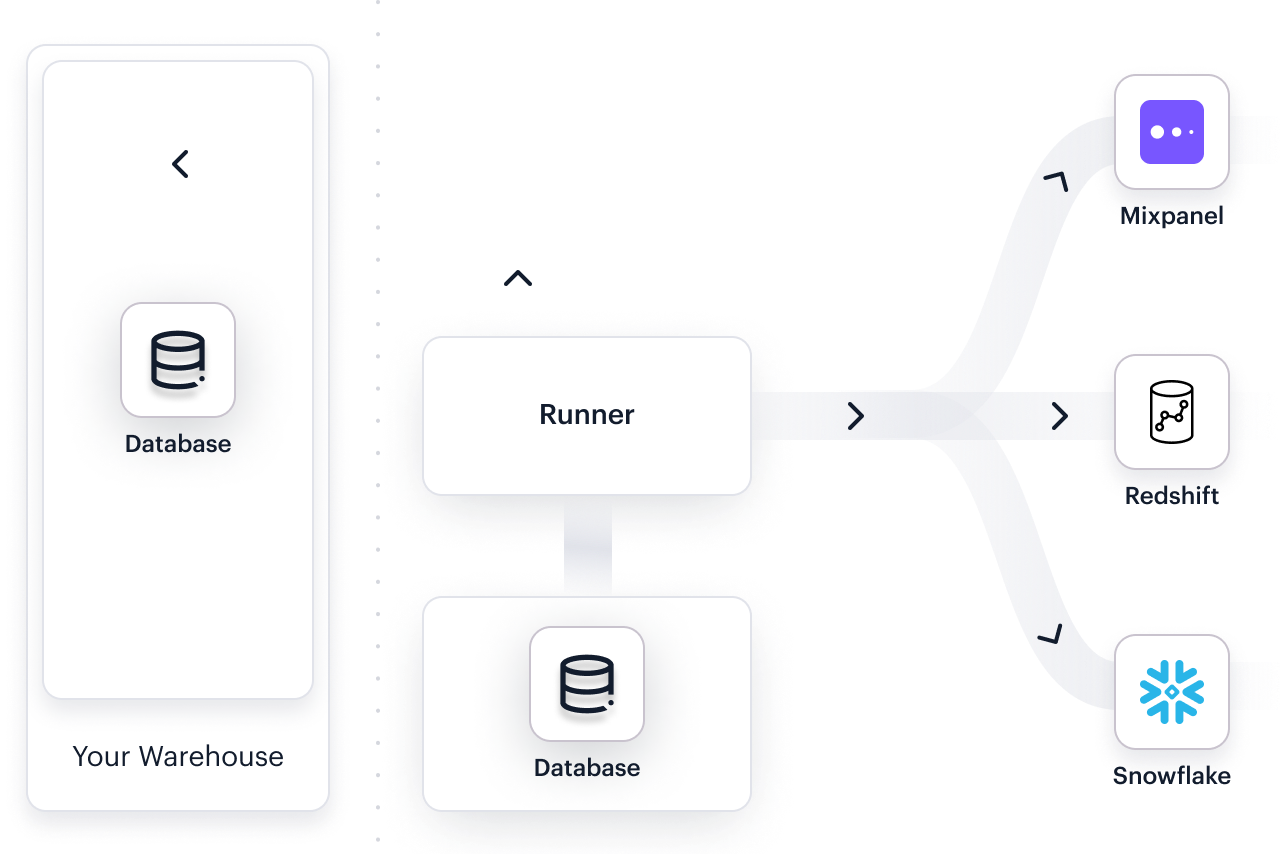

Warehouse data activation

Reverse ETL

Bring enriched data from your warehouse and activate it anywhere. Reverse ETL makes it easy for data teams to give marketers access to the data they need to build.

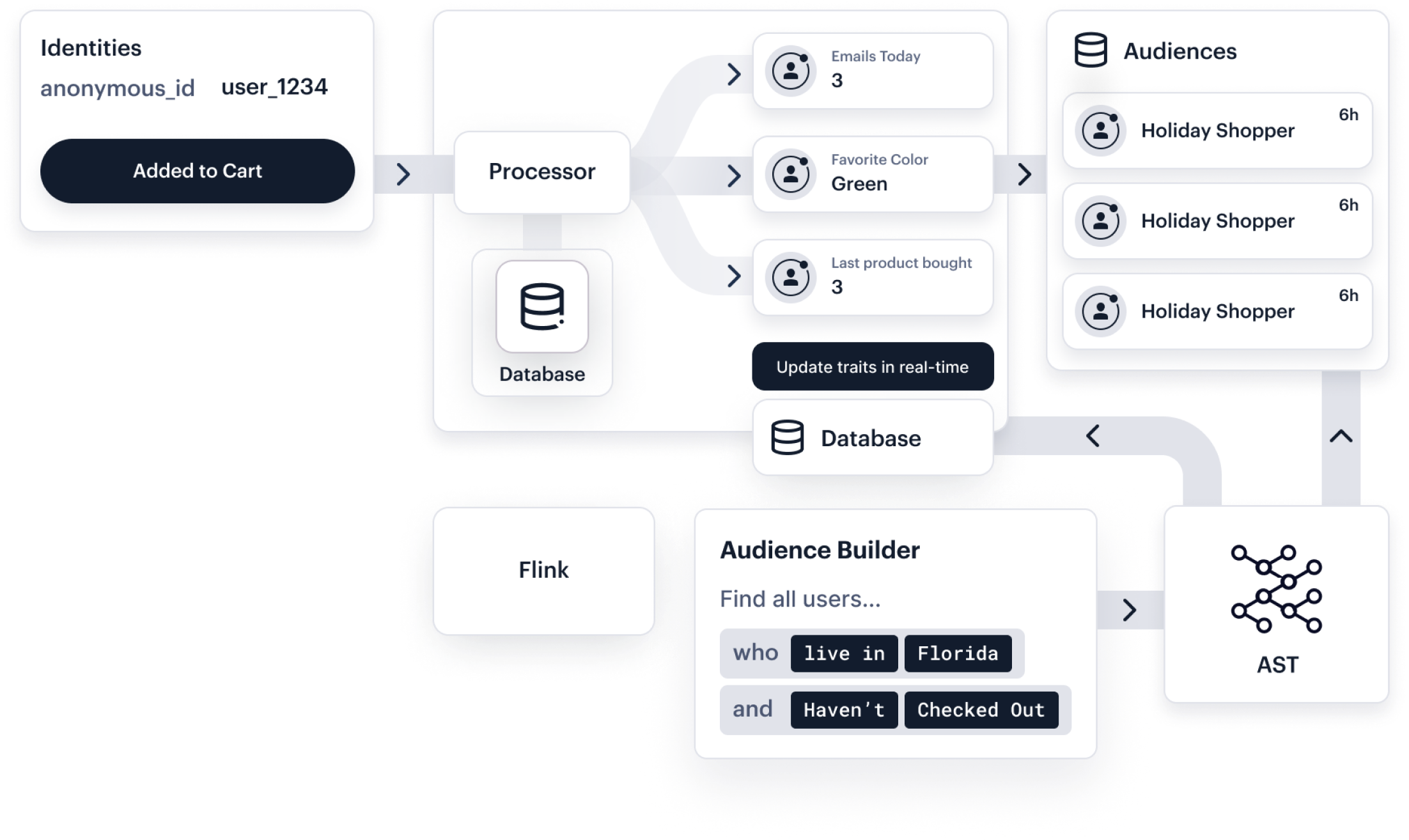

Aggregation

Audience Creation

Searching through billions of user actions to find who loaded a particular page more than 3 times in a month isn’t easy. You have to run complex aggregations, set up your data structures so they can be queried quickly and efficiently, and run it all on core infra.
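At small scale, the query itself is simple, as this sketch shows. The `build_audience` function and the event fields (`user_id`, `page`, `timestamp`) are assumptions made for illustration. The hard part described above is running the same aggregation over billions of events, which an in-memory counter like this one doesn’t attempt.

```python
from collections import Counter
from datetime import datetime


def build_audience(events, page, start, end, more_than=3):
    """Return user IDs that loaded `page` more than `more_than` times in [start, end)."""
    loads = Counter(
        event["user_id"]
        for event in events
        if event["page"] == page and start <= event["timestamp"] < end
    )
    return {user_id for user_id, count in loads.items() if count > more_than}
```

Calling it with `start=datetime(2024, 1, 1)` and `end=datetime(2024, 2, 1)` returns everyone who loaded the page at least 4 times in January.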

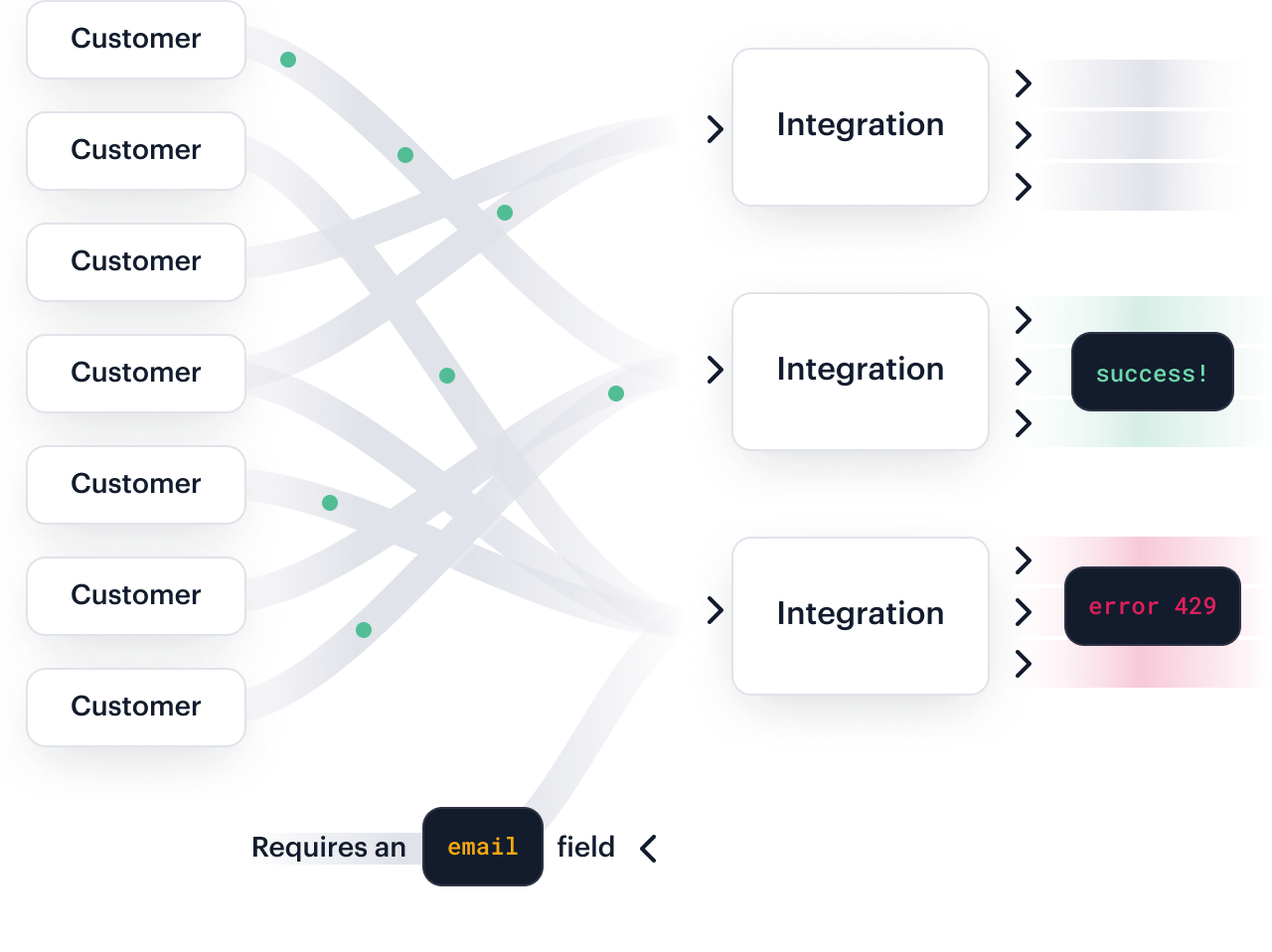

Delivery

Once you finally have data in one spot, there’s one last step—using that data. That means getting it into all of the different consumers and end tools.

In a controlled environment, this is easy. But over the open internet, it’s anything but.

You’re connecting to dozens of APIs. What if one fails? What if it gets slow? What if an API mysteriously changes? How do you ensure nothing goes missing?

You have all sorts of semi-structured data. How do you map it into structured forms like a data lake or a data warehouse?

It’s not easy, but here’s how we’ve built it.

Delivery

Centrifuge

APIs fail. At any given time, we see dozens of endpoints timing out, returning 5xx errors, or resetting connections. We built Centrifuge, infrastructure to reliably retry and deliver messages even in cases of extreme failure. It increases our message delivery rate by 1.5% on average.
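The simplest building block for this kind of reliability is a retry loop with exponential backoff and jitter, sketched below. It’s a generic illustration, not Centrifuge’s actual design: `deliver_with_retries`, `DeliveryError`, and the `send` callable are all hypothetical names.

```python
import random
import time


class DeliveryError(Exception):
    """Raised by a hypothetical send function on a timeout, 5xx, or connection reset."""


def deliver_with_retries(send, message, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call send(message), retrying on DeliveryError with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return send(message)
        except DeliveryError:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the failure to the caller.
            # Full jitter: wait a random time up to base_delay * 2^attempt seconds,
            # so many retrying clients don't hit a recovering endpoint in lockstep.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
```

The `sleep` parameter is injectable so the loop can be exercised in tests without actually waiting.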

Delivery

Destinations

Writing integrations is fussy. There are new APIs to learn, tricky XML and JSON parsing, authentication refreshes, and mapping little bits of data. Often these APIs have little documentation and unexpected error codes. We’ve built out our destinations to handle all of these tiny inconsistencies at scale.

Delivery

Warehouses / ETL

Most companies load data into a data warehouse. It’s the source of truth for various analytics deep dives. Getting data in there can be challenging, as you need to consider schema inference, data cleaning, loading at off-peak hours, and incrementally syncing new data. Here’s how we do it.
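To give a feel for schema inference, here’s a small sketch that derives column types from semi-structured rows and widens a column when rows disagree. The `infer_schema` function, the type names, and the widening rules are illustrative assumptions, not the rules Segment’s warehouse loader uses.

```python
def _column_type(value):
    # bool must be checked before int, because bool is a subclass of int in Python.
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "bigint"
    if isinstance(value, float):
        return "double"
    return "varchar"


# Pairs of types that can be merged without falling back to text.
_WIDENING = {frozenset(("bigint", "double")): "double"}


def infer_schema(rows):
    """Return {column: type} for a list of dict rows, skipping None values."""
    schema = {}
    for row in rows:
        for column, value in row.items():
            if value is None:
                continue
            new_type = _column_type(value)
            old_type = schema.get(column, new_type)
            if old_type == new_type:
                schema[column] = new_type
            else:
                # Conflicting types: widen if a rule exists, otherwise store as text.
                schema[column] = _WIDENING.get(frozenset((old_type, new_type)), "varchar")
    return schema
```

A column that is an integer in one row and a float in the next ends up as `double`; anything that can’t be reconciled falls back to `varchar`.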

Getting started is easy

Start connecting your data with Segment.