Scaling security services with AWS Organizations

How we improved Segment's security posture by adding AWS Organizations to our AWS security services implementation


Segment’s cloud infrastructure is mostly on AWS. We use dozens of accounts for Production, Stage, Development and even for interviewing engineers! We believe that the future of secure cloud computing is in isolating services and workflows in AWS accounts, but it comes with a number of challenges for our Security Teams. Earlier this year, we tackled the problem of managing AWS services like CloudTrail or GuardDuty while using multiple AWS accounts. When we started the project, we had trouble answering basic questions like:

Do we have CloudTrail enabled on this account?

Is GuardDuty running on this account and this region?

When we looked closely at our existing CloudTrail and GuardDuty configuration, we identified the following issues:

Having a duplicate CloudTrail trail for data plane events was costing $10K/month.

Some CloudTrail Trails were created manually using the AWS Console, so we didn’t even know they existed.

GuardDuty was not enabled by default on new AWS accounts, so our Incident Response team had blind spots across the AWS Organization.

With that in mind, we started thinking about how to fix these issues and keep them from reappearing a year down the road. In this post we’ll describe our old AWS security services configuration, and explain how we now use AWS Organizations to reduce maintenance work, increase coverage, and sleep better at night.

We’ll also cover how Segment uses Infrastructure as Code to configure and maintain these services, and talk about some of the problems we had to deal with.

Segment uses an Infrastructure as Code approach to configure and maintain our AWS infrastructure. If you’re not familiar with the idea of “infrastructure as code”, it’s an approach that lets you manage infrastructure using text-based configuration files. This means you can keep your infrastructure code in a versioned central repository, such as one hosted on GitHub.

This approach makes infrastructure deployments easy to repeat and reduces human error, such as typos made while configuring resources by hand. Because the configurations are in a versioned repository, it is also easy to roll back to a previous configuration if something goes wrong. Automation speeds up deployment as well: you can spin up entire stacks in seconds using your saved config files.

IaC is a great foundational approach, but it only gets you so far. You still need good organization, monitoring, and alerting to make sure your infrastructure is secure.

AWS announced AWS Organizations in February 2017. It was intended to make billing easier by having a single AWS account that pays for all other accounts.

Initially, Segment only used the billing features of Organizations, but as we imported AWS accounts into Infrastructure as Code, we discovered some of the additional benefits of AWS Organizations.

Organizational Units: The concept of an Organizational Unit (OU) is very simple: it’s just a way of grouping accounts. You could create an Organizational Unit called “Production” that contains all your production AWS accounts. Organizational Units can be nested up to 5 layers deep.

Service Control Policies: Organizational Units alone don’t bring any actual benefits aside from “organizing things”. The real benefit we got was enabling Service Control Policies (SCPs). These policies are very similar to IAM policies, with a few extra restrictions we won’t cover in this post. SCPs are attached to Organizational Units and apply to every AWS account included in that Organizational Unit. Let’s say we have an SCP like this one:
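A minimal sketch of such a policy, written as a Terraform resource to match the rest of this post. The resource name and policy name are hypothetical, and this is an illustration rather than the exact policy Segment uses:

```hcl
# Illustrative SCP: deny S3 bucket creation in every account it is attached to.
# The names "deny_s3_create_bucket" and "deny-s3-create-bucket" are hypothetical.
resource "aws_organizations_policy" "deny_s3_create_bucket" {
  name        = "deny-s3-create-bucket"
  description = "Deny s3:CreateBucket for all accounts under the target OU"
  type        = "SERVICE_CONTROL_POLICY"

  # SCPs use the same JSON grammar as IAM policies.
  content = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid      = "DenyS3CreateBucket"
        Effect   = "Deny"
        Action   = "s3:CreateBucket"
        Resource = "*"
      }
    ]
  })
}
```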

This policy, if applied to an OU, would deny every AWS account within the OU the ability to create an S3 bucket, regardless of the permissions granted by the account’s IAM roles. Not even administrators in that account can create S3 buckets.

With that in mind, we began planning which AWS APIs to restrict across accounts, prioritizing APIs that would put Segment at risk if the AWS account was compromised.

We applied some of the recommended SCPs AWS provides, but we also developed policies tailored to Segment’s infrastructure configuration.

Segment manages AWS Accounts, OUs and SCPs using Terraform. The example below shows an OU with its SCP, where we (among other actions) deny access to the AWS account Root user and also prevent the AWS accounts from leaving the Organization.
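As a rough sketch of what such a definition can look like (not Segment’s actual configuration), the snippet below creates an OU, a policy with two deny statements, and the attachment that binds them. The variable `parent_ou_id` and all resource names are assumptions made for illustration.

```hcl
# Hypothetical input: ID of the parent OU (or the organization root).
variable "parent_ou_id" {
  description = "ID of the parent OU or organization root"
  type        = string
}

resource "aws_organizations_organizational_unit" "production" {
  name      = "Production"
  parent_id = var.parent_ou_id
}

resource "aws_organizations_policy" "production_baseline" {
  name = "production-baseline"
  type = "SERVICE_CONTROL_POLICY"

  content = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        # Deny every action performed by the account Root user.
        Sid      = "DenyRootUser"
        Effect   = "Deny"
        Action   = "*"
        Resource = "*"
        Condition = {
          StringLike = {
            "aws:PrincipalArn" = "arn:aws:iam::*:root"
          }
        }
      },
      {
        # Prevent member accounts from leaving the Organization.
        Sid      = "DenyLeaveOrganization"
        Effect   = "Deny"
        Action   = "organizations:LeaveOrganization"
        Resource = "*"
      }
    ]
  })
}

# Attaching the policy to the OU applies it to every account inside the OU.
resource "aws_organizations_policy_attachment" "production_baseline" {
  policy_id = aws_organizations_policy.production_baseline.id
  target_id = aws_organizations_organizational_unit.production.id
}
```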

When we add a new AWS account, we use a Terraform module to specify the Organizational Unit the new account belongs to, as in this example.
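Our internal module is not reproduced here. A minimal sketch of the underlying idea, using the plain `aws_organizations_account` resource with a hypothetical account name, email address, and `production_ou_id` variable, could look like this:

```hcl
# Hypothetical input: ID of the OU the new account should live in.
variable "production_ou_id" {
  description = "ID of the Organizational Unit for production accounts"
  type        = string
}

# Placing the account under an OU makes it inherit that OU's SCPs.
resource "aws_organizations_account" "example_production" {
  name      = "example-production"            # hypothetical account name
  email     = "aws-example-prod@example.com"  # hypothetical root email
  parent_id = var.production_ou_id
}
```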

And that’s it. It’s a lot easier to understand the organization structure now that all accounts, Organizational Units and Service Control Policies are in the same GitHub repository. Service Control Policies are inherited through parent Organizational Units, so our Production, Stage and Dev accounts each have their own set of restrictions, and all of them share a common SCP from their parent OU, the Engineering OU, which has a set of denies that apply to all the AWS accounts.

This structured approach facilitates adding new AWS accounts to the right OU, applying default restrictions depending on its use case.

AWS CloudTrail is an AWS service that can log all management and data plane actions in your AWS Account.

Because CloudTrail is critical for security, it’s a good idea to deploy it with Terraform. We want to ensure that all accounts have appropriate CloudTrail coverage, and that new AWS accounts are automatically provisioned.

Before CloudTrail for Organizations was a “thing”, we used a CloudTrail Terraform module that we had to apply to each new account:
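The sketch below shows roughly what such a per-account trail involves; it is not our actual module. The variables `account_name` and `log_bucket_name` are hypothetical, and the shared bucket is assumed to already exist with a bucket policy that lets CloudTrail write to it.

```hcl
# Hypothetical inputs for a per-account trail.
variable "account_name" {
  description = "Short name of the AWS account, used as the S3 key prefix"
  type        = string
}

variable "log_bucket_name" {
  description = "Name of the shared S3 bucket that receives CloudTrail logs"
  type        = string
}

# One trail per account, all writing to the same bucket under different prefixes.
resource "aws_cloudtrail" "account" {
  name                          = "${var.account_name}-trail"
  s3_bucket_name                = var.log_bucket_name
  s3_key_prefix                 = var.account_name
  is_multi_region_trail         = true
  include_global_service_events = true
  enable_log_file_validation    = true
}
```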

Each new account logged to the same S3 bucket under its own prefix, but as the number of AWS accounts grew it became difficult to confirm that all trails were working as expected. We also found that we sometimes had duplicate trails, because one had been created manually before the Terraform module existed and then forgotten about.

AWS GuardDuty is an AWS service that generates alerts based on CloudTrail logs, VPC Flow Logs and DNS queries.

If you have more than one AWS account, you usually want to have one main AWS account that receives GuardDuty alerts from the rest of the accounts in the Organization. But this can be difficult as you increase the number of accounts over time.

These are the steps that we had to go through to have multiple accounts reporting to a main GuardDuty deployment:

To start with, we did this on the main account:

Create a GuardDuty detector in each region.

Send an invitation to each GuardDuty detector on the other accounts. So if you have five AWS accounts, the number of invitations to send is number of regions * (number of accounts - main account). That’s 16 * 4 = 64 invitations.

Then, on the secondary accounts:

Create a detector in each region.

Accept each invitation to start sending alerts to the main account.

This process was a manual nightmare, and not scalable. One of our engineers used Terraform to automate the process of creating GuardDuty detectors and sending invitations to each one of them from the main account. We still had to run the Terraform module every time we added an account, and we also still had to log in to each account to accept each invitation. Even with Infrastructure as Code it wasn't a great experience.
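The main-account half of that automation could look roughly like the sketch below, shown for a single region. This is an assumption-laden illustration, not the module our engineer wrote: the `member_accounts` variable is hypothetical, and the same resources would have to be repeated per region using provider aliases.

```hcl
# Hypothetical input: member account ID => root email address of that account.
variable "member_accounts" {
  description = "Map of member account IDs to their root email addresses"
  type        = map(string)
}

# Detector in the main account (one per region in a full setup).
resource "aws_guardduty_detector" "main" {
  enable = true
}

# One membership per account; invite = true sends the invitation.
# Each invitation still has to be accepted from inside the member account.
resource "aws_guardduty_member" "invited" {
  for_each = var.member_accounts

  detector_id = aws_guardduty_detector.main.id
  account_id  = each.key
  email       = each.value
  invite      = true
}
```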

Since this configuration is quite static, and detectors are unlikely to change over time, it makes you wonder about the benefits of setting it all up using Terraform. These types of services only need to be set up once, then you can forget about them. The important thing is to know that the service hasn’t been modified, and is still enabled. If we could set up the service and then apply a Service Control Policy to deny write access to these resources, we could have the assurance we need that these services are always running.

In April 2020, AWS launched GuardDuty for Organizations to simplify threat detection across all your AWS accounts.

When you enable this AWS Organizations feature, every time a new account is provisioned in your organization, AWS automatically creates a GuardDuty detector for the account.

With this change, we can simplify our configuration. Now, the only detectors we keep in Terraform are the ones we use on our delegated GuardDuty administrator account. We create detectors in all regions and turn on auto-enable, so any new AWS accounts in our AWS Organization automatically arrive with GuardDuty set up and working.

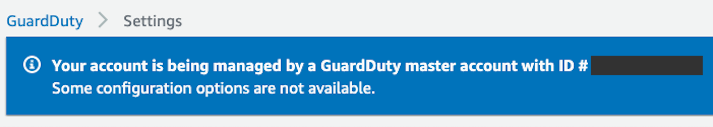
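For a single region, the delegated-administrator setup described above can be sketched as follows. The provider aliases, CLI profile names, region, and the `guardduty_admin_account_id` variable are all hypothetical. Older versions of the Terraform AWS provider expressed the last setting as `auto_enable = true` instead.

```hcl
# Hypothetical providers: one for the organization management account,
# one for the delegated GuardDuty administrator account.
provider "aws" {
  alias   = "management"
  region  = "us-west-2"
  profile = "org-management" # hypothetical CLI profile
}

provider "aws" {
  alias   = "guardduty_admin"
  region  = "us-west-2"
  profile = "guardduty-admin" # hypothetical CLI profile
}

variable "guardduty_admin_account_id" {
  description = "Account ID of the delegated GuardDuty administrator"
  type        = string
}

# From the management account: designate the delegated administrator.
resource "aws_guardduty_organization_admin_account" "this" {
  provider         = aws.management
  admin_account_id = var.guardduty_admin_account_id
}

# From the delegated administrator account: the detector we keep in Terraform.
resource "aws_guardduty_detector" "admin" {
  provider = aws.guardduty_admin
  enable   = true
}

# Auto-enable GuardDuty for accounts that join the organization.
resource "aws_guardduty_organization_configuration" "this" {
  provider   = aws.guardduty_admin
  depends_on = [aws_guardduty_organization_admin_account.this]

  detector_id                      = aws_guardduty_detector.admin.id
  auto_enable_organization_members = "NEW"
}
```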

All AWS accounts have GuardDuty detectors that are managed by the main account, so member accounts cannot suspend them. This gives us assurance that these resources will not be modified by account administrators or by an attacker.

On top of that, we use Service Control Policies to limit the Write actions allowed on GuardDuty.
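A minimal sketch of such a restriction is shown below. The list of denied actions is illustrative and is not the exact set we block, and the resource name is hypothetical.

```hcl
# Illustrative SCP: deny a few GuardDuty write actions in member accounts.
resource "aws_organizations_policy" "protect_guardduty" {
  name = "protect-guardduty"
  type = "SERVICE_CONTROL_POLICY"

  content = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid    = "DenyGuardDutyTampering"
        Effect = "Deny"
        Action = [
          "guardduty:DeleteDetector",
          "guardduty:UpdateDetector",
          "guardduty:DisassociateFromMasterAccount"
        ]
        Resource = "*"
      }
    ]
  })
}
```

Like any SCP, this only takes effect once it is attached to an OU or account.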

AWS released CloudTrail for Organizations in November 2019.

Similar to GuardDuty for Organizations, with CloudTrail for Organizations you no longer need to manage individual trails in your child accounts.

After you enable CloudTrail for Organizations on the main account, you can create a CloudTrail Trail set as an Organization Trail:
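A minimal sketch of an organization trail, assuming it is applied from the main account and that the bucket named by the hypothetical `org_trail_bucket_name` variable already has a policy allowing CloudTrail to write logs for the whole organization:

```hcl
# Hypothetical input: S3 bucket that receives the organization's logs.
variable "org_trail_bucket_name" {
  description = "Name of the S3 bucket for the organization trail"
  type        = string
}

# is_organization_trail = true makes the trail apply to every member account.
resource "aws_cloudtrail" "organization" {
  name                          = "organization-trail"
  s3_bucket_name                = var.org_trail_bucket_name
  is_organization_trail         = true
  is_multi_region_trail         = true
  include_global_service_events = true
  enable_log_file_validation    = true
}
```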

To cover data plane events on data stores across your organization, you can create a Trail dedicated to them:
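As an illustration, the sketch below logs S3 object-level events only; which data stores to cover is a choice, and S3 is used here purely as an example. The variable `data_events_bucket_name` and the trail name are hypothetical.

```hcl
# Hypothetical input: S3 bucket that receives the data event logs.
variable "data_events_bucket_name" {
  description = "Name of the S3 bucket for data plane event logs"
  type        = string
}

resource "aws_cloudtrail" "organization_data_events" {
  name                  = "organization-data-events"
  s3_bucket_name        = var.data_events_bucket_name
  is_organization_trail = true
  is_multi_region_trail = true

  event_selector {
    read_write_type = "All"
    # Management events are left to the main organization trail to avoid
    # paying for duplicate copies.
    include_management_events = false

    # Log object-level events for all S3 buckets.
    data_resource {
      type   = "AWS::S3::Object"
      values = ["arn:aws:s3"]
    }
  }
}
```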

With this configuration, we no longer had to worry about managing individual trails or provisioning Trails on new accounts. All new accounts come with CloudTrail automatically enabled and configured with the same values as your organizational trail.

Similar to GuardDuty, CloudTrail Trails on child accounts can’t be modified, assuring coverage across your organization.

We recommend that you use AWS Organizations and enable these features. This approach improves your security posture and frees engineering time, since you no longer need to do maintenance work on AWS GuardDuty or AWS CloudTrail, and you can use Service Control Policies to further restrict services within your accounts.

At Segment, we’re continuing to iterate on Service Control Policies to make sure we implement a “least-privilege” approach on new AWS accounts, and we’re focusing on limiting the number of AWS services available to each AWS account.
