Revamping Segment’s Flink real-time compute platform

Twilio Segment's shift to a Kubernetes-based platform transforms real-time computing, streamlining operations and empowering developers with heightened flexibility and efficiency.

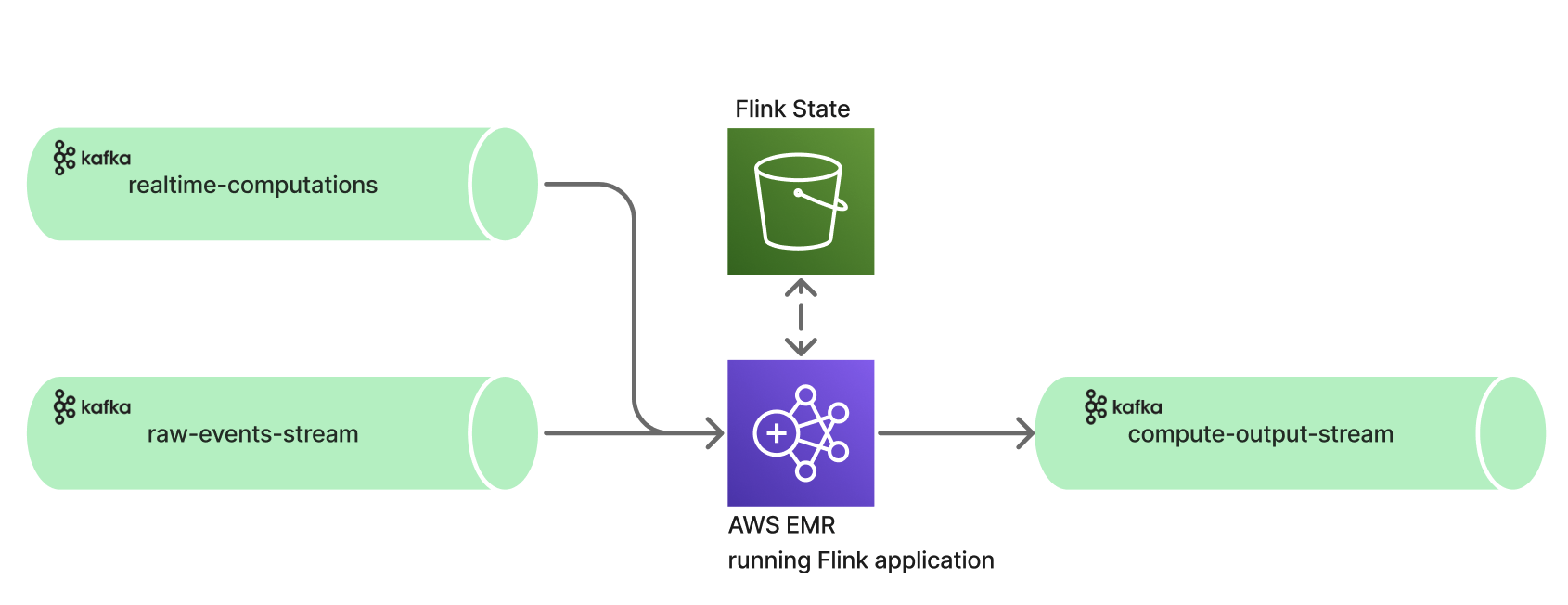

At the core of Twilio Segment is our real-time compute system, which processes millions of messages per second and computes audiences and traits. The system uses Apache Flink as its stream processing engine. We ran it on AWS EMR for about three years and recently revamped the platform by migrating these workloads to Kubernetes (AWS EKS).

In this blog post, we take a closer look at Twilio Segment's Flink real-time compute system from a platform perspective, and share how we enhanced the developer experience, reduced operational overhead, and brought down infrastructure spending by migrating the applications to a Kubernetes-based platform.

In our legacy setup, we were leveraging Amazon EMR to run our Flink applications. Amazon EMR supports Apache Flink as a YARN application so that you can manage resources along with other applications within a cluster. Flink-on-YARN allows you to submit transient Flink jobs, or you can create a long-running cluster that accepts multiple jobs and allocates resources according to the overall YARN reservation. Apache Flink is included in Amazon EMR release versions 5.1.0 and later.

Platform:

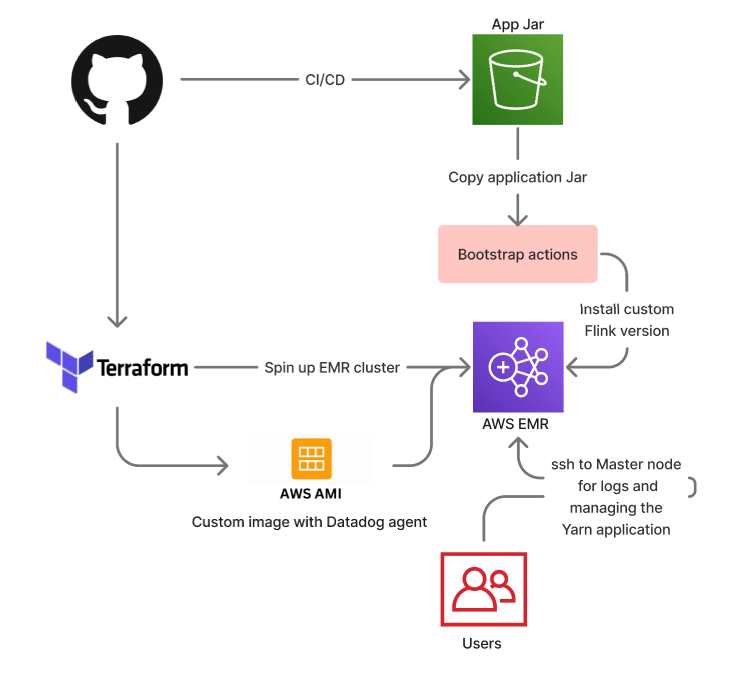

- The Flink version is tied to the underlying AWS EMR version. There is no native support for custom Flink versions, so in this setup an EMR bootstrap script installed a custom Flink version on the EMR nodes.
- There is no automatic recovery of the application if one of the EMR nodes fails due to an underlying hardware issue.
- EMR supports only a limited set of EC2 instance families.
- We had to build a custom AMI to support installing Datadog agents for metric collection.

Deployment process:

- Changing the lifecycle state of a Flink application (stopping or restarting it) required users to SSH into an EMR EC2 node and run YARN commands.
- Updating the application JAR required manually killing the YARN session, copying the new JAR via scp or s3 cp, and rerunning the launch script with the updated JAR.

Troubleshooting failures:

- Application logs are not stored beyond the lifecycle of the cluster.
- Troubleshooting required SSH access to the EMR EC2 nodes.
- Accessing the Flink UI required SSH tunneling.

Cost

AWS EMR imposes additional cost on top of standard EC2 pricing.

To amplify the pain points, we had multiple Flink/EMR clusters in production, each running a different set of computations. With no automation in place, users had to repeat the process for each cluster. On top of that, we were spending approximately $250,000 a year on the EMR surcharge alone.

After some initial research, we found that deploying Flink applications on Kubernetes with the Flink Kubernetes Operator solves most of the pain points of running Flink on EMR, without re-architecting the applications or migrating to a different solution altogether. It was also an easy pick because Segment has an amazing set of deployment and observability tooling built around Kubernetes, and we could take advantage of it right out of the box.

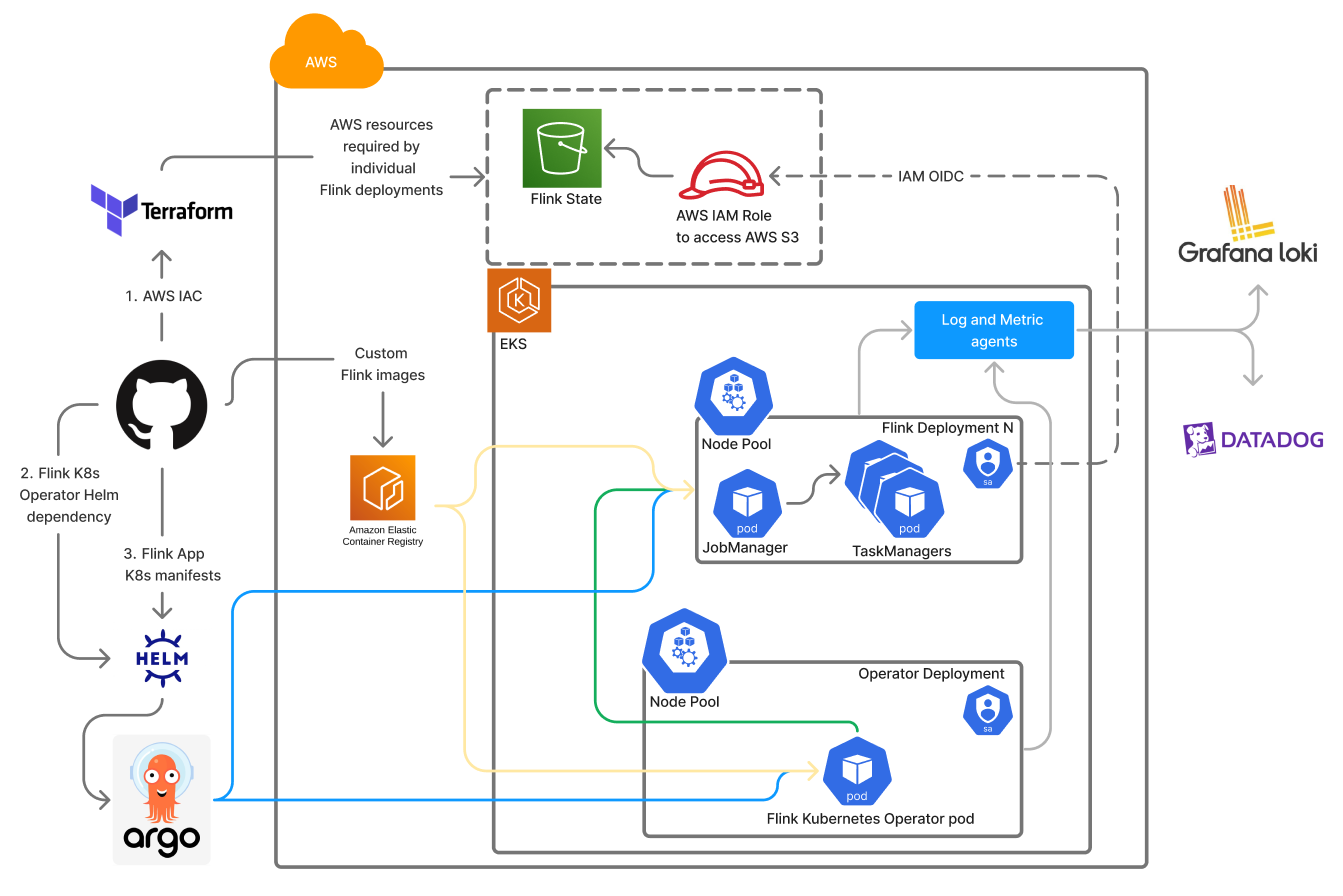

This is a high-level breakdown of our new setup:

- Terraform is used for infrastructure setup on AWS. This includes the EKS cluster, EC2 node pools in EKS, AWS S3 buckets for Flink state, AWS IAM roles for access to S3, and other resources.
- Flink TaskManager, JobManager, and Operator pods are integrated with our observability stack, which ships logs to Grafana Loki and metrics to Datadog.
- Each Flink application is launched with its own dedicated EKS node pool, namespace, and service account for better isolation. A single EKS cluster can accommodate multiple applications, with one Flink Kubernetes Operator managing all of them.
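
To make the per-application isolation concrete, here is a minimal sketch of what a `FlinkDeployment` resource for the Flink Kubernetes Operator could look like, built as a Python dictionary and printed as JSON (which `kubectl apply -f` also accepts). The application name, image, JAR path, `node-pool` label, resource sizes, and Flink version are all hypothetical placeholders, not our production values.

```python
import json


def flink_deployment(app_name: str, image: str, jar_uri: str, parallelism: int) -> dict:
    """Build a FlinkDeployment manifest for one isolated Flink application.

    Assumption: the namespace, service account, and node pool label are all
    derived from the application name. This naming scheme is illustrative only.
    """
    return {
        "apiVersion": "flink.apache.org/v1beta1",
        "kind": "FlinkDeployment",
        "metadata": {"name": app_name, "namespace": app_name},
        "spec": {
            "image": image,
            "flinkVersion": "v1_17",  # placeholder version
            "serviceAccount": f"{app_name}-flink",
            "flinkConfiguration": {"taskmanager.numberOfTaskSlots": "4"},
            # Pin JobManager and TaskManager pods to the dedicated node pool.
            "podTemplate": {
                "spec": {"nodeSelector": {"node-pool": app_name}},
            },
            "jobManager": {"resource": {"cpu": 1, "memory": "2048m"}},
            "taskManager": {"resource": {"cpu": 4, "memory": "8192m"}},
            "job": {
                "jarURI": jar_uri,
                "parallelism": parallelism,
                # Take a savepoint before upgrading and restore from it afterwards.
                "upgradeMode": "savepoint",
            },
        },
    }


if __name__ == "__main__":
    manifest = flink_deployment(
        app_name="audience-compute",  # hypothetical application
        image="registry.example.com/flink-app:1.0.0",
        jar_uri="local:///opt/flink/usrlib/app.jar",
        parallelism=8,
    )
    print(json.dumps(manifest, indent=2))
```

Because the operator watches these resources across namespaces, adding another application in this sketch amounts to calling the function with a different name and applying the result.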

With a more reliable, highly available setup, very little operational work was needed to maintain the Flink applications. Developers were able to customize and deploy new Flink applications within minutes. Eliminating the AWS EMR surcharge also reduced infrastructure cost, which was a huge win.

Migrating to this platform required a few platform and Flink configuration enhancements. These are some highlights, which we will not cover in detail in this article:

- Flink configuration updates to improve checkpointing performance.
- Switching the S3 file system plugin from flink-s3-fs-hadoop to flink-s3-fs-presto. This required rigorous parameter tuning and performance testing, as we have ~50TB of state data on AWS S3 for every Flink cluster and this performance was key to keeping downtime short.
- Introducing entropy injection for the S3 file systems and asking the AWS support team to partition our S3 buckets, which improved checkpointing performance without hitting S3 rate limit errors.
- Supporting an additional Kubernetes Ingress with an internal AWS Route53 domain to expose the Flink queryable state service, so that users can query the state of any Flink operator.
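
As an illustration of the entropy injection item above, the sketch below shows the relevant Flink configuration keys (`s3.entropy.key`, `s3.entropy.length`, and a checkpoint directory using the `s3p://` scheme of the Presto S3 plugin) together with a simplified imitation of what Flink does with the marker. The bucket name, marker string, and entropy length are assumptions, and `resolve_entropy` is a hypothetical helper written for this post, not Flink's implementation.

```python
import random
import string

ENTROPY_KEY = "_entropy_"  # marker substring Flink looks for in the path (assumed value)
ENTROPY_LENGTH = 4         # number of random characters to inject (assumed value)

# Hypothetical bucket; s3p:// routes the path through flink-s3-fs-presto.
CHECKPOINT_DIR = f"s3p://example-flink-state/{ENTROPY_KEY}/checkpoints"

# Entries that would go into the Flink configuration.
flink_configuration = {
    "state.checkpoints.dir": CHECKPOINT_DIR,
    "s3.entropy.key": ENTROPY_KEY,
    "s3.entropy.length": str(ENTROPY_LENGTH),
}


def resolve_entropy(path: str, inject: bool, rng: random.Random) -> str:
    """Simplified imitation of how the entropy marker in a path is handled.

    Checkpoint data files get the marker replaced with random characters,
    which spreads writes across S3 key prefixes. Files written without
    entropy (such as checkpoint metadata) get the marker removed, so their
    location stays predictable.
    """
    if inject:
        alphabet = string.ascii_lowercase + string.digits
        entropy = "".join(rng.choices(alphabet, k=ENTROPY_LENGTH))
        return path.replace(ENTROPY_KEY, entropy)
    return path.replace(ENTROPY_KEY + "/", "")


if __name__ == "__main__":
    rng = random.Random()
    print(resolve_entropy(CHECKPOINT_DIR + "/chk-42/state-file", True, rng))
    print(resolve_entropy(CHECKPOINT_DIR + "/chk-42/_metadata", False, rng))
```

Since S3 request limits apply per key prefix, randomizing a path segment near the start of the key is what lets the checkpoint writes of a large job fan out across partitions instead of concentrating on one prefix.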

In this article, we briefly touched upon the legacy EMR-based Flink setup, its pain points, and how we solved them by migrating to a Kubernetes-based platform. With the new platform, the deployment lifecycle of Flink applications is managed by Helm and ArgoCD.

We invested in developer experience leveraging all the amazing tooling we have around Kubernetes applications at Segment and that resulted in better developer outcomes and a more reliable platform. This migration also created a paved path that opened up opportunities for other teams and developers to onboard their Flink applications. Developers can easily create new Flink clusters by writing a few lines of YAML and handing it off to the automation.
