“How should I secure my production environment, but still give developers access to my hosts?” It’s a simple question, but one that requires a fairly nuanced answer. You need a system that is secure, resilient to failure, scalable to thousands of engineers, and fully auditable.

There are a number of solutions out there, from simply managing authorized_keys files to running your own LDAP or Kerberos setup. But none of them quite worked for us (for reasons I’ll share below). We wanted a system that required little additional infrastructure but still gave us security and resilience.

This is the story of how we got rid of our shared accounts and brought secure, highly-available, per-person logins to our fleet of Linux EC2 instances without adding a bunch of extra infrastructure to our stack.

Limitations of shared logins

By default, AWS EC2 instances have only one login user. When launching an instance, you select an SSH key pair from those you have previously uploaded. The EC2 control plane, typically in conjunction with cloud-init, installs the public key onto the instance and associates it with that user (ec2-user, ubuntu, centos, etc.). That user typically has sudo access so that it can perform privileged operations.

This design works well for individuals and very small organizations that have just a few people who can log into a host. But when your organization begins to grow, the limitations of the single-key, single-user approach quickly become apparent. Consider the following questions:

Who should have a copy of the private key? Usually the person or people directly responsible for managing the EC2 instances should have it. But what if they need to go on vacation? Who should get a copy of the key in their absence?

What should you do when you no longer want a user to be able to log into an instance? Suppose someone in possession of a shared private key leaves the company. That user can still log into your instances until the public key is removed from them. Do you continue to trust the user with this key? If not, how do you generate and distribute a new key pair? This poses a technical and logistical challenge. Automation can help resolve that, but it doesn’t solve other issues.

What will you do if the private key is compromised? This is similar to the previous question, but it requires more urgent attention. It might be reasonable to trust a departing user for a while, but if you know your key is compromised, you will almost certainly want to replace it immediately. If the automation to manage it doesn’t yet exist, you may find yourself in a very stressful situation, and stress and urgency often lead to errors that can make bad problems worse.

One solution that’s become increasingly popular in response to these issues has been to set up a Certificate Authority that signs temporary SSH credentials. Instead of trusting a private key, the server trusts the Certificate Authority. Netflix’s BLESS is an open-source implementation of such a system.

The short validity lifetime of the issued certificates does mitigate the above risks. But it still doesn’t quite solve the following problems:

How do you provide for different privilege levels? Suppose you want to allow some users to perform privileged operations, but want others to be able to log in in “read-only” mode. With a shared login, that’s simply impossible: everyone who holds the key gets privileged access.

How do you audit activity on systems that have a shared login? At Segment, we believe that in order to have best-in-class security and reliability, we must know the “Five Ws” of every material operation that is performed on our instances:

-

What happened?

-

Where did it take place?

-

When did it occur?

-

Why did it happen?

-

Who was involved?

Only with proper auditing can we answer these questions with certainty. As we’ve grown, our customers have increasingly demanded that we be able to answer them, too. And if you ever pursue compliance with frameworks such as ISO 27001, PCI DSS, or SOC 2, you will be required to show that you have an audit trail at hand.

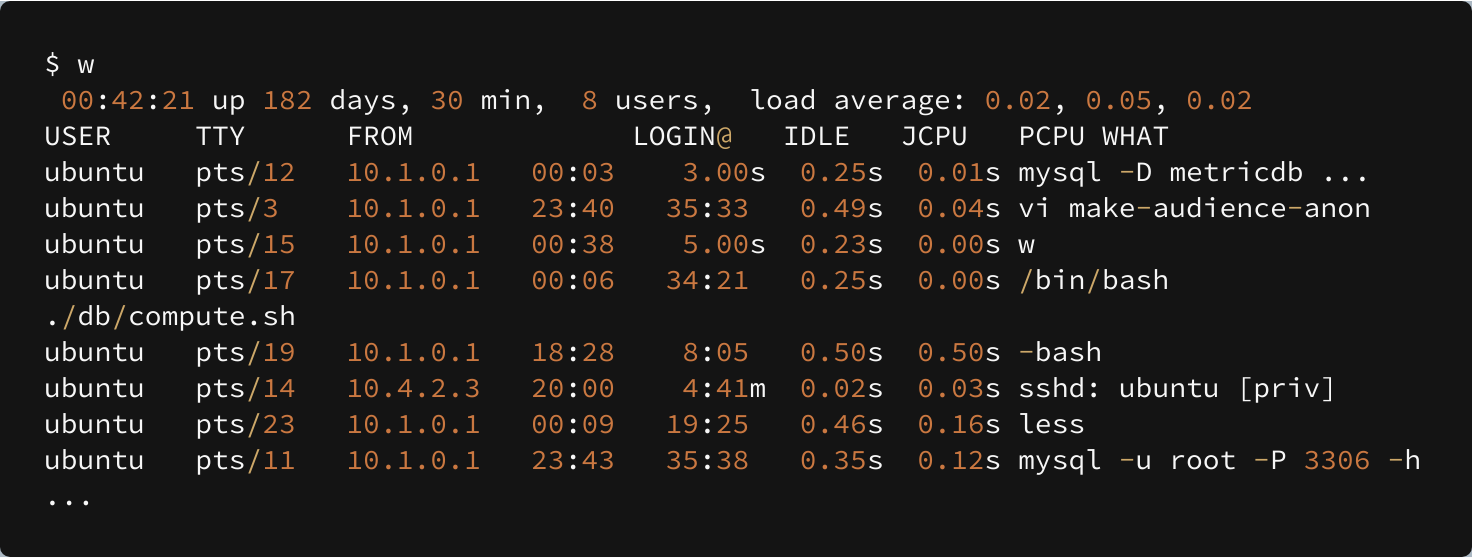

We needed better information than this:

Goals

Our goals were the following:

-

Be able to thoroughly audit activity on our servers;

-

Have a single source of truth for user information;

-

Work harmoniously with our single sign-on (SSO) providers; and

-

Use two-factor authentication (2FA) to have top-notch security.

Here’s how we accomplished them.

Segment’s solution: LDAP with a twist

LDAP is an acronym for “Lightweight Directory Access Protocol.” Put simply, it’s a protocol for querying a directory service that provides information about users (people) and things (objects). LDAP directories offer a rich query language, configurable schemas, and replication support. If you’ve ever logged into a Windows domain, you probably used LDAP without knowing it (it’s at the heart of Active Directory). Linux supports LDAP as well, and there’s an open-source server implementation called OpenLDAP.
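To give a flavor of that query language, here is a small Python sketch that builds a search filter for a single Linux account. It assumes the RFC 2307 `posixAccount` schema with a `uid` attribute. That schema is common for Linux logins, but its use here is our assumption. The sketch also escapes user-supplied input as RFC 4515 requires, so a name like `*)(uid=*` can't widen the search.

```python
"""Build an RFC 4515 LDAP search filter for a POSIX account lookup."""

# Characters that must be escaped inside an LDAP filter value (RFC 4515),
# each replaced by a backslash and its two-digit hex code.
_ESCAPES = {
    "\\": r"\5c",
    "*": r"\2a",
    "(": r"\28",
    ")": r"\29",
    "\x00": r"\00",
}


def escape_filter_value(value: str) -> str:
    """Escape a user-supplied string so the server matches it literally."""
    return "".join(_ESCAPES.get(ch, ch) for ch in value)


def posix_user_filter(username: str) -> str:
    """Return a filter matching one posixAccount entry by its uid attribute."""
    return f"(&(objectClass=posixAccount)(uid={escape_filter_value(username)}))"


if __name__ == "__main__":
    print(posix_user_filter("mifi"))
    # An attempted wildcard injection is neutralized by escaping.
    print(posix_user_filter("*)(uid=*"))
```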

You may ask: Isn’t LDAP notoriously complicated? Yes, yes it is. Running your own LDAP server isn’t for the faint of heart, and making it highly available is extremely challenging.

You may ask: Aren’t all the management interfaces for LDAP pretty poor? We weren’t sold on any of the ones we’d seen. Active Directory is arguably the gold standard here, but we’re not a Windows shop, and we have no foreseeable plans to become one. Besides, we didn’t want to be in the business of managing Yet Another User Database in the first place.

You may ask: Isn’t depending on a directory server to gain access to production resources risky? It certainly can be. Being locked out of our servers because of an LDAP server failure is an unacceptable risk. But this risk can be significantly mitigated by decoupling the information (the user attributes we want to propagate) from the service itself. We’ll discuss how we do that shortly.

Choosing an LDAP service

As we entered the planning process, we made a few early decisions that helped guide our choices.

First, we’re laser-focused on making Segment better every day, and we didn’t want to be distracted by having to maintain a dial-tone service that’s orthogonal to our product. We wanted a solution that was as “maintenance free” as possible. This quickly ruled out OpenLDAP, which is challenging to operate, particularly in a distributed fashion.

We also knew that we didn’t want to spend time maintaining the directory. We already have a source of truth about our employees: Our HR system, BambooHR, is populated immediately upon hiring. We didn’t want to have to re-enter data into another directory if we could avoid it. Was such a thing possible?

Yes, it was!

We turned to Foxpass to help us solve the problem. Foxpass is a SaaS infrastructure provider that offers LDAP and RADIUS service, using an existing authentication provider as a source of truth for user information. They support several authentication providers, including Okta, OneLogin, G Suite, and Office 365.

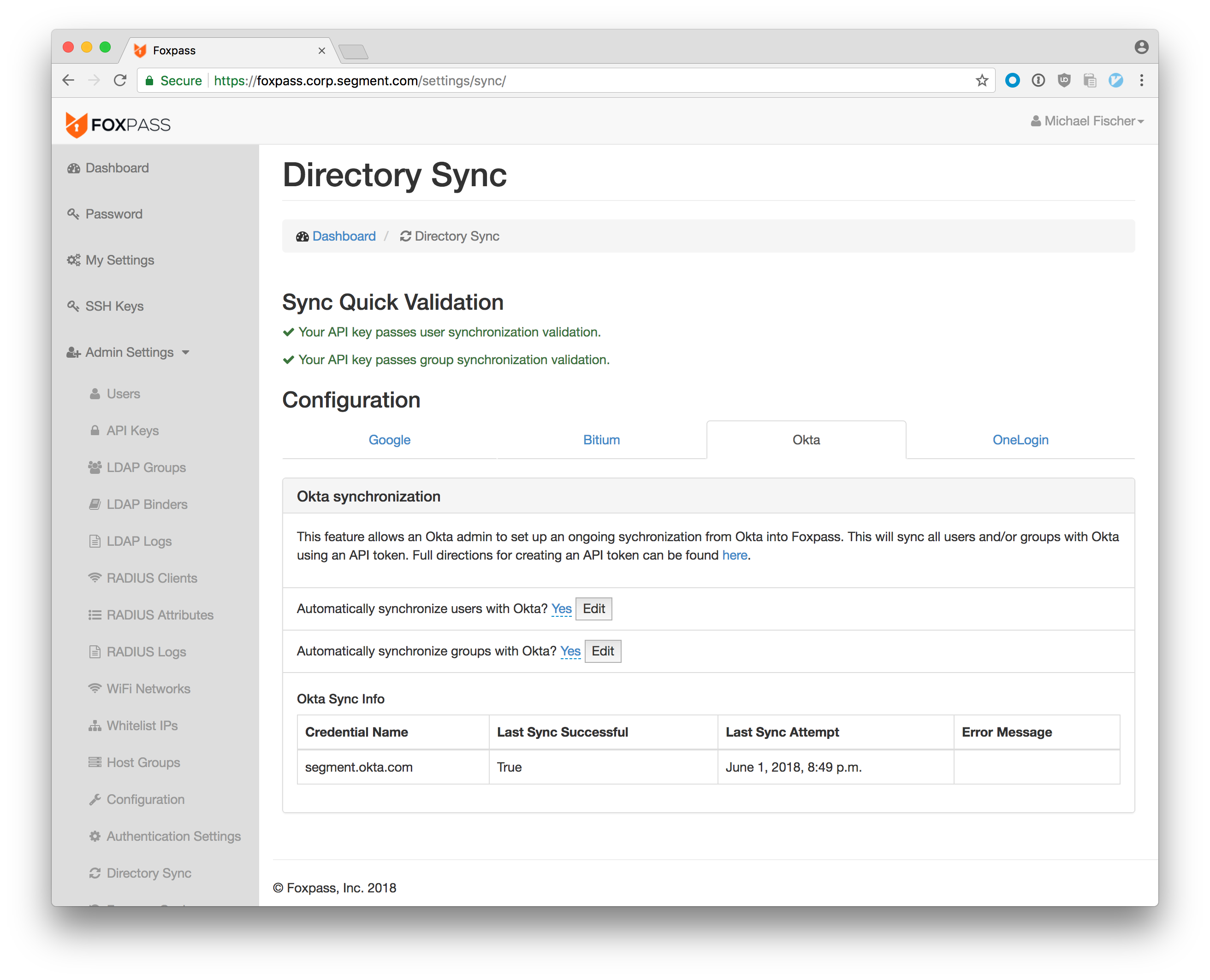

We use Okta to provide Single Sign-On for all our users, so this seemed perfect for us. (Check out aws-okta if you haven’t already.) Better still, our Okta account is synchronized from BambooHR, so all we had to do was synchronize Foxpass with Okta.

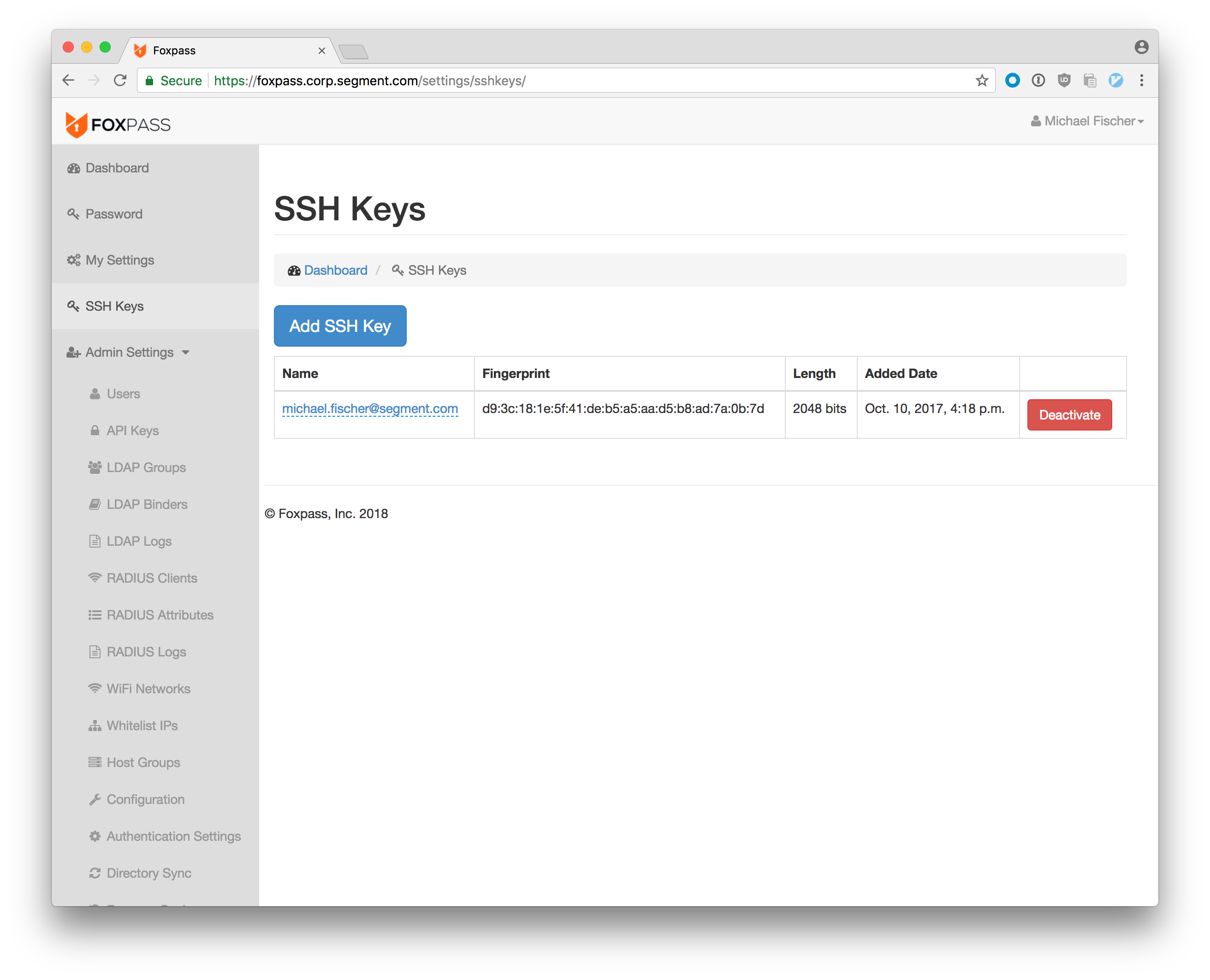

The last bit of data Foxpass needs is our users’ SSH keys. Fortunately, it’s simple for a new hire to upload their key on their first day: just log into the web interface (which, of course, is protected by Okta SSO via G Suite) and add it. SSH keys can also be rotated through the same interface.
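A directory that distributes SSH keys to every host should reject malformed uploads. Here is a hedged sketch of such a check. It is not Foxpass’s actual validation. It confirms that an OpenSSH public key line is well formed: the base64 body must decode, and the key type embedded at the start of the blob must match the declared type. The allow-list of key types is a hypothetical policy.

```python
"""Sanity-check an OpenSSH public key line before accepting it."""
import base64
import binascii
import struct

# Key types we choose to accept; this allow-list is a hypothetical policy.
ALLOWED_TYPES = {"ssh-ed25519", "ssh-rsa", "ecdsa-sha2-nistp256"}


def key_type_of(line: str) -> str:
    """Return the key type if `line` is well formed, else raise ValueError.

    An OpenSSH public key line is "<type> <base64 blob> [comment]". The blob
    starts with a big-endian uint32 length followed by the same type string.
    """
    parts = line.strip().split()
    if len(parts) < 2:
        raise ValueError("expected '<type> <base64> [comment]'")
    declared, b64 = parts[0], parts[1]
    try:
        blob = base64.b64decode(b64, validate=True)
    except binascii.Error as exc:
        raise ValueError("key body is not valid base64") from exc
    if len(blob) < 4:
        raise ValueError("key blob is too short")
    (length,) = struct.unpack(">I", blob[:4])
    if len(blob) < 4 + length:
        raise ValueError("key blob is truncated")
    embedded = blob[4:4 + length].decode("ascii", errors="replace")
    if embedded != declared:
        raise ValueError("declared key type does not match the key blob")
    if declared not in ALLOWED_TYPES:
        raise ValueError(f"key type {declared!r} is not allowed")
    return declared
```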

Service Architecture

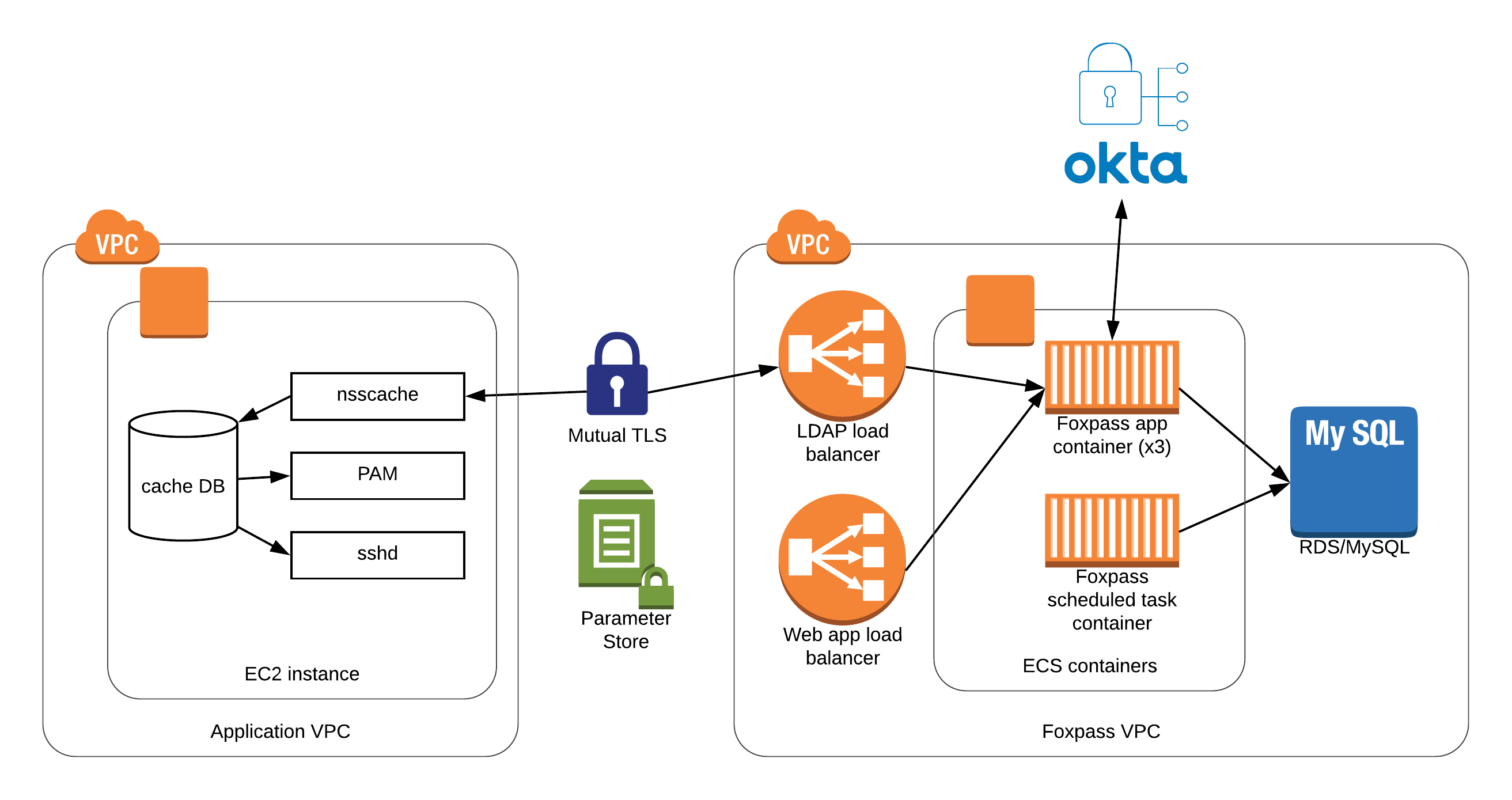

In addition to their SaaS offering, Foxpass also offers an on-premises solution in the form of a Docker image. This appealed to us because we wanted to reduce our exposure to network-related issues, and we were already comfortable running containers on AWS ECS (Elastic Container Service). So we decided to host it ourselves. To do this, we:

-

Created a dedicated VPC for the cluster, with all the necessary subnets, security groups, and an Internet Gateway

-

Created an RDS (Aurora MySQL) cluster used for data storage

-

Created an EC2 Auto Scaling Group of three instances with the ECS Agent and Docker Engine installed; if an instance goes down, it is automatically replaced

-

Created an ECS cluster for Foxpass

-

Created a pair of ECS services for Foxpass to manage its containers on our EC2 instances (one service for its HTTP/LDAP services; one service for its maintenance worker)

-

Stored database passwords and TLS certificates in EC2 Parameter Store

We also modified the Foxpass Docker image with a custom ENTRYPOINT script that fetches the sensitive data from Parameter Store (via Chamber) before launching the Foxpass service:
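The Python entrypoint below is a rough illustration of that pattern, not Segment’s actual script. It does roughly what `chamber exec` does: it reads every parameter under a path from Parameter Store using boto3’s `get_parameters_by_path` paginator, exports the values as environment variables, and then execs the real service. The `SECRETS_PREFIX` variable, the `/foxpass` path, and the variable-naming rule are hypothetical.

```python
"""Sketch of a container entrypoint: load secrets into the environment, then exec.

A rough stand-in for `chamber exec`, not Segment's actual script.
"""
import os
import sys


def params_to_env(params, prefix):
    """Map SSM parameters under `prefix` to environment variable names.

    e.g. "/foxpass/db-password" -> "DB_PASSWORD". This naming rule is our own
    choice for the sketch and may differ from Chamber's.
    """
    base = prefix.rstrip("/") + "/"
    env = {}
    for p in params:
        name = p["Name"]
        if name.startswith(base):
            key = name[len(base):].replace("-", "_").replace("/", "_").upper()
            env[key] = p["Value"]
    return env


def fetch_params(prefix):
    """Fetch and decrypt every parameter under `prefix` (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so params_to_env works without boto3

    ssm = boto3.client("ssm")
    params = []
    pages = ssm.get_paginator("get_parameters_by_path").paginate(
        Path=prefix, Recursive=True, WithDecryption=True
    )
    for page in pages:
        params.extend(page["Parameters"])
    return params


def main(argv):
    prefix = os.environ.get("SECRETS_PREFIX", "/foxpass")  # hypothetical path
    env = dict(os.environ)
    env.update(params_to_env(fetch_params(prefix), prefix))
    # Replace this process with the service so it keeps PID 1 and gets signals directly.
    os.execvpe(argv[0], argv, env)


if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1:])
```

The image would run this as its ENTRYPOINT, with the service command passed as arguments. Using `exec` rather than a child process leaves the service as the container’s main process, so stop signals from ECS reach it directly.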

Client instance configuration

On Linux, you need to configure two separate subsystems when you adopt LDAP authentication:

Authentication: This is the responsibility of PAM (Pluggable Authentication Modules) and sshd (the SSH daemon). These subsystems check the credentials of anyone who logs in or wants to switch user contexts (e.g. with sudo).

User ID mappings: This is the realm of NSS, the Name Service Switch. Even if you have authentication properly configured, Linux won’t be able to map user and group names to UIDs and GIDs (e.g. mifi is UID 1234) without it.
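You can watch NSS at work from Python. The standard `pwd` and `grp` modules call `getpwnam(3)` and `getgrgid(3)`, which glibc resolves through whatever sources `/etc/nsswitch.conf` lists. Here is a minimal sketch:

```python
"""Resolve a user name to its UID and GID through NSS."""
import grp
import pwd


def describe_user(name: str) -> str:
    """Look up `name` via getpwnam(3), which glibc resolves using /etc/nsswitch.conf."""
    entry = pwd.getpwnam(name)  # raises KeyError if no NSS source knows the user
    group = grp.getgrgid(entry.pw_gid)  # GID -> group name, also via NSS
    return f"{entry.pw_name} uid={entry.pw_uid} gid={entry.pw_gid}({group.gr_name})"


if __name__ == "__main__":
    print(describe_user("root"))
```

On a host set up as this post describes, a directory user such as `mifi` would resolve the same way once the local cache has been populated.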

There are many options for setting these subsystems up. Red Hat, for example, recommends using SSSD on RHEL. You can also use pam_ldap and nss_ldap to configure Linux to authenticate directly against your LDAP servers. But we chose neither of those options: We didn’t want to leave ourselves unable to log in if the LDAP server was unavailable, and both of those solutions have cases where a denial of service is possible. (SSSD does provide a read-through cache, but it’s only populated when a user logs in. SSSD is also somewhat complex to set up and debug.)

nsscache

Ultimately we settled on nsscache. nsscache (along with its companion NSS library, libnss-cache) is a tool that queries an entire LDAP directory and saves the matching results to a local cache file. nsscache is run when an instance is first started, and about every 15 minutes thereafter via a systemd timer.

This gives us a very strong guarantee: if nsscache runs successfully once at startup, every user who was in the directory at instance startup will be able to log in. If some catastrophe occurs later, only EC2 instances launched after it are affected; on existing instances, only directory changes made after the failure are deferred.
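That guarantee depends on never replacing a good cache with a bad one. Here is a minimal sketch of that refresh pattern in Python. It is our illustration, not nsscache’s code. It renders directory entries into `/etc/passwd` format, writes them to a temporary file, and atomically renames that file over the old cache only if the fetch succeeded. The attribute names (`uid`, `uidNumber`, `gidNumber`, `gecos`, `homeDirectory`, `loginShell`) follow the RFC 2307 schema. The `/bin/bash` default shell is an assumption.

```python
"""Minimal sketch of an nsscache-style refresh: render, then swap atomically."""
import os
import tempfile


def passwd_line(u: dict) -> str:
    """Render one RFC 2307-style directory entry in /etc/passwd format."""
    return ":".join([
        u["uid"], "x", str(u["uidNumber"]), str(u["gidNumber"]),
        u.get("gecos", ""), u["homeDirectory"],
        u.get("loginShell", "/bin/bash"),  # assumed default shell
    ])


def refresh_cache(fetch, path: str) -> bool:
    """Rebuild the cache at `path` from `fetch()`; keep the old file on any failure."""
    try:
        entries = fetch()  # e.g. a full-directory LDAP query; may raise on network errors
        if not entries:
            raise ValueError("refusing to replace the cache with an empty directory")
        body = "".join(passwd_line(u) + "\n" for u in entries)
    except Exception:
        return False  # previous cache stays in place (real code would log the error)
    fd, tmp = tempfile.mkstemp(dir=os.path.dirname(path) or ".", prefix=".cache.")
    try:
        with os.fdopen(fd, "w") as f:
            f.write(body)
            f.flush()
            os.fsync(f.fileno())
        os.chmod(tmp, 0o644)
        os.replace(tmp, path)  # atomic rename: readers see the old or new file, never half
    except BaseException:
        os.unlink(tmp)
        raise
    return True
```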

To make it work, we changed the following lines in /etc/nsswitch.conf. Note the cache keyword before compat:
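Order matters because NSS tries each listed source in turn and, by default, stops at the first one that knows the name. The toy Python model below is not glibc’s implementation. It shows the effect of a line like `passwd: cache compat`, an assumed example consistent with the text. Directory users are answered from the local cache, while local accounts such as root still fall through to `compat`, which reads the local `/etc/passwd`.

```python
"""Toy model of NSS source ordering (not glibc's implementation)."""


def parse_sources(line):
    """Split 'passwd: cache compat' into ('passwd', ['cache', 'compat'])."""
    database, _, rest = line.partition(":")
    # Drop [STATUS=action] clauses; this model only handles plain source names.
    sources = [s for s in rest.split() if not s.startswith("[")]
    return database.strip(), sources


def lookup(name, line, backends):
    """Return (source, entry) from the first listed source that knows `name`."""
    _, sources = parse_sources(line)
    for source in sources:
        entry = backends.get(source, {}).get(name)
        if entry is not None:
            return source, entry  # SUCCESS: the default action is to stop here
        # NOTFOUND: the default action is to continue with the next source
    return None, None
```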

So that users’ home directories are automatically created at login, we added the following to /etc/pam.d/common-session:
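On many Linux distributions, the `pam_mkhomedir` module handles this step. Here is a hedged Python sketch of what such a hook does on first login: it copies a skeleton directory into place and hands ownership to the user. The `/etc/skel` default and the `0o700` mode are our assumptions, and changing ownership to another user requires root.

```python
"""Sketch of a create-home-on-first-login hook (cf. pam_mkhomedir)."""
import os
import shutil


def ensure_home(home: str, uid: int, gid: int, skel: str = "/etc/skel", mode: int = 0o700) -> bool:
    """Create `home` from `skel` if it doesn't exist; return True if it was created."""
    if os.path.isdir(home):
        return False  # existing home directories are left untouched
    if os.path.isdir(skel):
        shutil.copytree(skel, home, symlinks=True)  # also creates missing parents
    else:
        os.makedirs(home)
    # Hand the whole tree to the user; lchown avoids following symlinks.
    os.lchown(home, uid, gid)
    for root, dirs, files in os.walk(home):
        for name in dirs + files:
            os.lchown(os.path.join(root, name), uid, gid)
    os.chmod(home, mode)
    return True
```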

nsscache also ships with a program called nsscache-ssh-authorized-keys, which takes a single username argument and outputs the SSH keys associated with that user. The sshd configuration (/etc/ssh/sshd_config) is straightforward:
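sshd runs the program named by `AuthorizedKeysCommand` as the user given by `AuthorizedKeysCommandUser`. When no arguments are configured, it passes the target username. Each line the program prints is treated as an authorized key for that login. The Python sketch below mimics such a helper. It is not nsscache’s implementation, and both the cache path and the `username:key` line format are hypothetical.

```python
"""Sketch of an AuthorizedKeysCommand helper that prints a user's cached keys.

Assumed cache format (hypothetical): one "username:<public key line>" per line.
"""
import sys

CACHE_PATH = "/etc/sshkey.cache"  # hypothetical location


def keys_for(username: str, cache_lines) -> list:
    """Return every public key recorded for `username`."""
    keys = []
    for line in cache_lines:
        user, sep, key = line.rstrip("\n").partition(":")
        if sep and user == username and key.strip():
            keys.append(key.strip())
    return keys


def main(argv, cache_path=CACHE_PATH) -> int:
    if len(argv) != 1:
        print("usage: authorized-keys USERNAME", file=sys.stderr)
        return 1
    try:
        with open(cache_path) as f:
            keys = keys_for(argv[0], f)
    except OSError:
        return 1  # print nothing, so this helper authorizes no keys
    for key in keys:
        print(key)
    return 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1:]))
```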

Emergency login

We haven’t had any reliability issues with nsscache or Foxpass since we rolled it out in late 2017. But that doesn’t mean we’re not paranoid about losing access, especially during an incident! So just in case, we have a group of emergency users whose SSH keys live in a secret S3 bucket. At instance startup, and regularly thereafter, a systemd unit reads the keys from the S3 bucket and appends them to the /etc/ssh/emergency_authorized_keys file.
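Here is a minimal Python sketch of such a sync step. It assumes a single S3 object that holds one key per line, and the bucket and object names are hypothetical. Unlike a plain append, it skips keys that are already present, so running it every few minutes doesn’t grow the file.

```python
"""Sketch: merge emergency SSH keys from S3 into a local authorized_keys file."""
import os


def merge_keys(existing: str, incoming: str) -> str:
    """Append keys from `incoming` that are not already present (idempotent)."""
    have = [line.strip() for line in existing.splitlines() if line.strip()]
    seen = set(have)
    for line in incoming.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and line not in seen:
            have.append(line)
            seen.add(line)
    return "".join(line + "\n" for line in have)


def sync_from_s3(bucket: str, key: str, path: str) -> None:
    """Download the key list (needs boto3 and credentials) and update `path`."""
    import boto3  # imported lazily so merge_keys works without boto3

    body = boto3.client("s3").get_object(Bucket=bucket, Key=key)["Body"].read().decode()
    existing = ""
    if os.path.exists(path):
        with open(path) as f:
            existing = f.read()
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        f.write(merge_keys(existing, body))
    os.chmod(tmp, 0o644)
    os.replace(tmp, path)  # atomic swap so sshd never reads a partial file
```

With append-only semantics, deleting a key from the bucket does not remove it from hosts. If you need revocation to take effect on the next sync, rewrite the file from the bucket’s contents on each run instead of merging.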

As with ordinary users, emergency users must pass two-factor authentication to log in. For extra security, S3 Event Notifications alert us whenever a new key is added to the bucket.
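S3 Event Notifications can invoke an AWS Lambda function with a JSON event whose `Records` list names the bucket and object key. SNS, SQS, and EventBridge are other possible destinations. Here is a hedged sketch of the alerting side, assuming a Lambda destination. The handler picks out `ObjectCreated` events, and printing stands in for whatever pager or chat integration you use.

```python
"""Sketch of an AWS Lambda handler that alerts on new objects in a key bucket."""
import json
from urllib.parse import unquote_plus


def new_key_objects(event: dict) -> list:
    """Return (bucket, key) for every ObjectCreated record in an S3 event."""
    found = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        s3 = record["s3"]
        # Object keys arrive URL-encoded in S3 event notifications.
        found.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return found


def handler(event, context):
    """Lambda entry point; logging stands in for a real alerting hook."""
    for bucket, key in new_key_objects(event):
        print(json.dumps({"alert": "emergency SSH key added", "bucket": bucket, "key": key}))
```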

We also had to modify /etc/ssh/sshd_config to make it work:

Security

Bastion servers

Security is of utmost importance at Segment. Consistent with best practices, we protect our EC2 instances by forcing all access to them through a set of dedicated bastion servers.

Our bastion servers differ from many others in that we don’t permit shell access to them at all: their sole purpose is to perform 2FA and forward inbound connections through secure tunnels to our EC2 instances.

To enforce these restrictions, we made a few modifications to our configuration files.

First, we published a patch to nsscache that optionally overrides the users’ shell when creating the local login database from LDAP. On the bastion servers, each user’s shell is a program that prints a message to stdout explaining why the bastion cannot be logged into, and then exits with a nonzero status.
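Here is a small Python sketch of the idea. It is not the actual nsscache patch. One function applies the shell override to `/etc/passwd`-format lines, and the other models the replacement shell. The program path and message are hypothetical.

```python
"""Sketch of a bastion login-shell override for /etc/passwd-format lines."""

REFUSE_SHELL = "/usr/local/bin/bastion-no-shell"  # hypothetical program path


def override_shell(passwd_lines, shell=REFUSE_SHELL):
    """Replace field 7 (the login shell) of each line with `shell`."""
    out = []
    for line in passwd_lines:
        fields = line.rstrip("\n").split(":")
        if len(fields) != 7:
            raise ValueError(f"malformed passwd line: {line!r}")
        fields[6] = shell
        out.append(":".join(fields))
    return out


def refuse_login() -> int:
    """What the replacement shell does: explain on stdout, then fail."""
    print("This bastion only forwards SSH connections; interactive shells are disabled.")
    return 1
```

The installed program would simply call `sys.exit(refuse_login())`. sshd refuses logins for users whose shell doesn’t exist or isn’t executable, so the program must be present on every bastion. Tunneled connections that only open a forwarding channel never start the shell, so they are unaffected.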

Second, we enabled 2FA via Duo Security. Duo is an authentication provider that sends push notifications to our team’s phones and requires confirmation before a login completes. Setting it up involved installing their client package and making a few configuration file changes.

First, we had to update PAM to use their authentication module:

Then, we updated our /etc/ssh/sshd_config file to allow keyboard-interactive authentication (so that users could respond to the 2FA prompts):
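A common way to require both an SSH key and the Duo prompt is sshd’s `AuthenticationMethods publickey,keyboard-interactive`, together with `UsePAM yes` and keyboard-interactive authentication enabled. This may not match the exact configuration used here. The toy Python model below captures that directive’s rule: each whitespace-separated list is an alternative, and every comma-separated method in at least one list must succeed. It ignores sshd’s `method:submethod` syntax.

```python
"""Toy model of sshd's AuthenticationMethods rule (not OpenSSH's code)."""


def allowed(spec: str, completed: set) -> bool:
    """True if every method in at least one comma-separated list has succeeded.

    `spec` looks like 'publickey,keyboard-interactive'; alternative lists are
    separated by whitespace.
    """
    for chain in spec.split():
        if all(method in completed for method in chain.split(",")):
            return True
    return False
```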

On the client side, access to protected instances is managed through a custom SSH configuration file distributed through Git. An example stanza that configures proxying through a bastion cluster looks like this:
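Here is a hedged Python sketch that renders such a stanza using OpenSSH’s `ProxyJump` option (available since OpenSSH 7.3). The host pattern, bastion name, and username are hypothetical, and the real stanza may use other options such as `ProxyCommand`. `ProxyJump` opens a forwarded TCP channel through the bastion without starting a shell there, which suits bastions that refuse shell access.

```python
"""Sketch: render a client ~/.ssh/config stanza that hops through a bastion."""


def proxy_stanza(host_pattern: str, bastion: str, user: str) -> str:
    """Return an OpenSSH config block that reaches `host_pattern` via `bastion`."""
    return "\n".join([
        f"Host {host_pattern}",
        f"    User {user}",
        f"    ProxyJump {user}@{bastion}",
        "",  # trailing newline
    ])


if __name__ == "__main__":
    print(proxy_stanza("10.1.*", "bastion.example.com", "mifi"), end="")
```

With a stanza like this in `~/.ssh/config`, `ssh 10.1.2.3` would first authenticate to the bastion, including the Duo prompt, and then authenticate to the target host over an end-to-end encrypted session tunneled through it.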

Mutual TLS

To avoid an impersonation attack, we needed to ensure our servers connected to and received information only from the LDAP servers we established ourselves. Otherwise, an attacker could provide their own authentication credentials and use them to gain access to our systems.

Our LDAP servers are centrally located and trusted in all regions. Since Segment is 100% cloud-based, and cloud IP addresses are subject to change, we didn’t feel comfortable relying solely on network ACLs to protect us. This is an instance of the zero-trust network problem: How do you ensure application security in a potentially hostile network environment?

A common answer is to set up mutual TLS authentication, or mTLS. With mTLS, the server validates the client’s certificate and the client validates the server’s. If either check fails, the connection is not established.
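Python’s `ssl` module makes the two halves of mTLS easy to see. In this sketch, the server context sets `verify_mode = CERT_REQUIRED`, so the handshake fails without a client certificate signed by the private root. The client context already requires a verified server certificate and hostname by default, and it presents its own certificate. The file paths are placeholders. Only the private CA is loaded, not the system trust store, so certificates from public CAs are rejected.

```python
"""Sketch of mutual-TLS contexts with Python's ssl module."""
import ssl


def server_context(cert_file: str, key_file: str, ca_file: str) -> ssl.SSLContext:
    """Server side: present our certificate and require a client cert from our CA."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.load_cert_chain(cert_file, key_file)
    ctx.load_verify_locations(cafile=ca_file)  # trust only the private root
    ctx.verify_mode = ssl.CERT_REQUIRED  # no valid client certificate, no handshake
    return ctx


def client_context(cert_file: str, key_file: str, ca_file: str) -> ssl.SSLContext:
    """Client side: verify the server against our CA and present our own cert."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)  # CERT_REQUIRED + check_hostname by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.load_verify_locations(cafile=ca_file)  # again, only the private root
    ctx.load_cert_chain(cert_file, key_file)
    return ctx
```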

We created a root certificate, then used it to sign the client and server certificates. Both kinds of certificates are securely stored in EC2 Parameter Store and encrypted both at rest and in transit, and are installed at instance-start time on our clients and servers. In the future, we may use AWS Certificate Manager Private Certificate Authority to generate certificates for newly-launched instances.

Conclusion

That's it! Let's recap:

-

By leaning on Foxpass, we were able to integrate with our existing SSO provider, Okta, and avoided adding complicated new infrastructure to our stack.

-

We leveraged nsscache to prevent any upstream issues from locking us out of our hosts. We use mutual TLS between nsscache and the Foxpass LDAP service to communicate securely in a zero-trust network.

-

Just in case, we sync a small number of emergency SSH keys from S3 to each host at boot.

-

We don't allow shell access to our bastion hosts. They exist purely for establishing secure tunnels into our AWS environments.

In sharing this, we hope others find an easier path toward secure, personalized logins for their compute instance fleets. If you have any thoughts or questions, feel free to tweet @Segment or email me at michael@dynamine.net.
