Memory management in Go can be tricky, to say the least. However, after reading the literature, one might be led to believe that all the problems are solved: sophisticated automated systems manage the lifecycle of memory allocation and free us from these burdens.

But if you’ve ever tried to tune the garbage collector of a JVM program or optimize the allocation pattern of a Go codebase, you understand that this is far from a solved problem. Automated memory management in Go helpfully rules out a large class of errors, but that’s only half the story. The hot paths of our software must be built in a way that lets these systems work efficiently.

We found inspiration to share our learnings in this area while building a high-throughput service in Go called Centrifuge, which processes hundreds of thousands of events per second. Centrifuge is a critical part of Segment’s infrastructure. Consistent, predictable behavior is a requirement. Tidy, efficient, and precise use of memory is a major part of achieving this consistency.

In this post we’ll cover common patterns that lead to inefficiency and production surprises related to memory allocation as well as practical ways of blunting or eliminating these issues. We’ll focus on the key mechanics of the allocator that provide developers a way to get a handle on their memory usage.

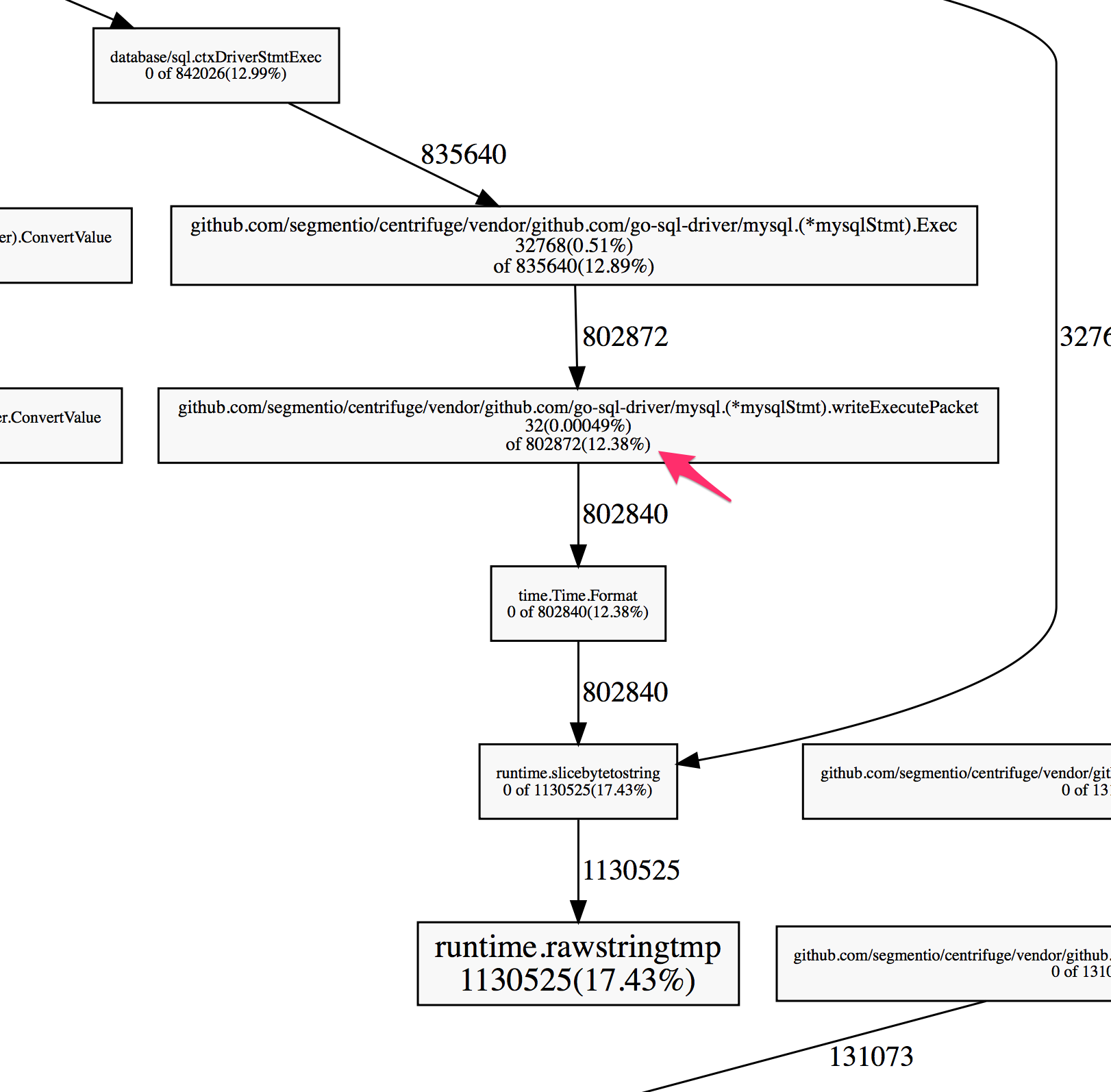

Our first recommendation is to avoid premature optimization. Go provides excellent profiling tools that can point directly to the allocation-heavy portions of a codebase. There’s no reason to reinvent the wheel, so instead of taking readers through them here, we’ll refer to the excellent post on profiling Go programs on the official Go blog. It has a solid walkthrough of using pprof for both CPU and allocation profiling. These are the same tools that we use at Segment to find bottlenecks in our production Go code, and they should be the first thing you reach for as well.

Use data to drive your optimization!

Analyzing Our Escape

Go manages memory allocation automatically. This prevents a whole class of potential bugs, but it doesn’t completely free the programmer from reasoning about the mechanics of allocation. Since Go doesn’t provide a direct way to manipulate allocation, developers must understand the rules of this system so that they can use it to their own benefit.

If you remember one thing from this entire post, it should be this: stack allocation is cheap and heap allocation is expensive. Now let’s dive into what that actually means.

Go allocates memory in two places: a global heap for dynamic allocations and a local stack for each goroutine. Go prefers allocation on the stack, and most of the allocations within a given Go program will be on the stack. Stack allocation is cheap because it only requires two CPU instructions: one to push onto the stack for allocation, and another to release from the stack.

Unfortunately, not all data can use memory allocated on the stack. Stack allocation requires that the lifetime and memory footprint of a variable can be determined at compile time. Otherwise, a dynamic allocation onto the heap occurs at runtime: malloc must search for a chunk of free memory large enough to hold the new value, and later the garbage collector scans the heap for objects that are no longer referenced. It probably goes without saying that this is significantly more expensive than the two instructions used by stack allocation.

The compiler uses a technique called escape analysis to choose between these two options. The basic idea is to do the work of garbage collection at compile time. The compiler tracks the scope of variables across regions of code and uses this data to determine which variables pass a set of checks proving that their lifetime is entirely knowable at compile time. If a variable passes these checks, its value can be allocated on the stack. If not, it is said to escape, and must be heap allocated.

The rules for escape analysis aren’t part of the Go language specification. For Go programmers, the most straightforward way to learn about these rules is experimentation. Building with go build -gcflags '-m' makes the compiler print the results of its escape analysis. Let’s look at an example: