Data Observability: A must-have guide for modern businesses

Dive into the essential strategies, tools, and best practices to ensure data observability and drive business success.


Data observability refers to the real-time monitoring of data as it flows through various pipelines. It aims to maintain the accuracy and reliability of an organization’s data by providing transparency into how that data is governed throughout its lifecycle.

Data observability tools often also send immediate alerts about issues or anomalies, which helps prevent bad data.


As organizations scale their systems, create more apps, and launch more revenue streams, the complexity of their IT environment grows. And with it comes an inundation of data, which can often lead to a fragmented view of their operations and customers.

One way to bridge the gap between data chaos and coherence is through data observability. This guide explores the fundamentals of data observability, its key pillars, and how implementing these principles can transform your data management practices for the better.

The main goals of data observability tend to be:

Real-time insights: Data observability facilitates access to real-time insights, empowering organizations to make informed decisions promptly.

Proactive issue identification: By proactively identifying issues, data observability helps prevent potential disruptions before they escalate, enhancing operational efficiency.

Streamlined data governance: Through comprehensive monitoring, data observability supports the enforcement of robust data governance practices, ensuring compliance and data integrity.

Informed strategic decisions: With a clear understanding of data health, organizations can make strategic decisions confidently, aligning their actions with business objectives and market trends.

The significance of data observability lies in its coverage of the data lifecycle. It equips data engineers and professionals with the ability to:

Monitor data sets in motion or at rest

Confirm data types and formats

Identify anomalies indicating potential issues

Track and optimize data pipeline performance

Data observability relies on five key pillars to ensure the health and effectiveness of a data ecosystem. Each pillar plays a crucial role in maintaining the integrity, reliability, and usefulness of data throughout its lifecycle.

Freshness concerns the timeliness of data. It assesses how quickly data is updated or ingested into the system, ensuring that insights are based on the most recent information. Monitoring data freshness enables organizations to make decisions based on up-to-date insights, enhancing agility and responsiveness in dynamic environments.
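As a rough illustration of a freshness check, the sketch below compares a dataset's last update time against a maximum allowed age. The function name `is_fresh`, the one-hour threshold, and the timestamps are assumptions made for this example, not part of any specific tool.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_fresh(last_updated: datetime, max_age: timedelta,
             now: Optional[datetime] = None) -> bool:
    """Return True if the data was updated within the allowed age window."""
    now = now or datetime.now(timezone.utc)
    return now - last_updated <= max_age


# Hypothetical example: a table last loaded at 09:00, checked at 12:00
# against a one-hour freshness threshold.
checked_at = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
stale = not is_fresh(last_load, timedelta(hours=1), now=checked_at)
```

In practice the threshold would depend on how often each dataset is expected to update.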

Quality pertains to the accuracy, completeness, and consistency of data. It involves evaluating data integrity, validity, and reliability to ensure that data is fit for its intended purpose.

By maintaining high data quality standards, organizations can mitigate the risks associated with erroneous or misleading information, fostering trust in data-driven decision-making processes.

Volume refers to the scale and magnitude of data. It involves monitoring the size and growth rate of datasets to ensure that infrastructure and resources can effectively handle data demands. Managing data volume enables organizations to optimize storage, processing, and analysis capabilities, ensuring scalability and efficiency in handling large datasets.
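One simple way to monitor volume is to compare today's row count with recent history. The sketch below uses a z-score with a threshold of three standard deviations; the function name, the threshold, and the sample counts are assumptions for illustration, and real tools may use more sophisticated models.

```python
from statistics import mean, stdev


def volume_is_anomalous(history: list[int], today: int,
                        threshold: float = 3.0) -> bool:
    """Flag today's count if it lies more than `threshold` standard
    deviations away from the mean of the historical counts."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu  # any change from a constant history is unusual
    return abs(today - mu) / sigma > threshold


# Hypothetical daily row counts for one table.
daily_rows = [1000, 1020, 980, 1010, 990]
sudden_drop = volume_is_anomalous(daily_rows, 400)
normal_day = volume_is_anomalous(daily_rows, 1005)
```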

Schema defines the structure, format, and organization of data. It encompasses metadata such as data types, relationships, and constraints, providing a framework for interpreting and processing data effectively.

Monitoring schema consistency and evolution enables organizations to maintain data interoperability and compatibility across different systems and applications.
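Schema monitoring can be sketched as a comparison between an expected schema and the one actually observed. The example below is a minimal illustration that represents each schema as a mapping from column name to type name; the column names and the `schema_diff` helper are hypothetical.

```python
def schema_diff(expected: dict[str, str],
                observed: dict[str, str]) -> dict[str, list[str]]:
    """Report columns that are missing, unexpected, or changed in type."""
    shared = expected.keys() & observed.keys()
    return {
        "missing": sorted(expected.keys() - observed.keys()),
        "unexpected": sorted(observed.keys() - expected.keys()),
        "type_changed": sorted(c for c in shared if expected[c] != observed[c]),
    }


# Hypothetical schemas for an orders table.
expected = {"user_id": "string", "amount": "float", "created_at": "timestamp"}
observed = {"user_id": "string", "amount": "string", "signup_source": "string"}
drift = schema_diff(expected, observed)
```

An empty list under every key would indicate that the observed schema matches what downstream systems expect.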

Lineage traces the origin, transformation, and movement of data throughout its lifecycle. It involves documenting data lineage to understand how data is generated, modified, and spread across various processes and systems.

By establishing data lineage, organizations can ensure data provenance, traceability, and compliance with regulatory requirements, enhancing transparency and accountability in data management practices.
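Lineage is often modeled as a graph in which each dataset points to the datasets it was derived from. The sketch below walks such a graph to find everything upstream of a given dataset; the dataset names and the `upstream_of` helper are assumptions made for this example.

```python
def upstream_of(dataset: str, parents: dict[str, list[str]]) -> set[str]:
    """Return every dataset that `dataset` depends on, directly or indirectly."""
    seen: set[str] = set()
    stack = list(parents.get(dataset, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(parents.get(node, []))
    return seen


# Hypothetical lineage: each key lists its direct upstream datasets.
parents = {
    "revenue_dashboard": ["orders_clean"],
    "orders_clean": ["orders_raw", "customers_raw"],
}
sources = upstream_of("revenue_dashboard", parents)
```

When an issue appears in a downstream report, a traversal like this narrows down which upstream datasets could have introduced it.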

Facing the challenges of data observability head-on is crucial for modern businesses aiming to leverage their data to its fullest potential. Here’s a look at the problems and potential solutions.

Ensuring data remains accurate, complete, and timely across diverse data ecosystems is a significant challenge. Poor data quality can lead to misguided decisions and undermine business operations.

Solution: Establish a robust data observability framework and maintain detailed documentation. This strategic foundation supports effective change management and ensures ongoing data integrity, enabling businesses to make decisions based on reliable data.

The integration of new systems into existing tech stacks introduces complexity, potentially disrupting data flow and system performance.

Solution: Embrace scalable and adaptable workflows for integrating new technologies. A deep understanding of existing and new systems can help with seamless integration, ensuring the data ecosystem remains coherent and functional.

Achieving real-time monitoring in the face of vast data volumes is taxing, with potential delays in identifying and addressing critical data issues.

Solution: Invest in advanced monitoring tools capable of providing real-time insights. Features such as anomaly detection and automated alerts are crucial for proactive issue resolution, maintaining operational efficiency, and supporting timely decision-making.

Balancing the need for observability with stringent data privacy and security requirements is increasingly difficult, especially as regulations evolve.

Solution: Implement comprehensive security measures, including data encryption and strict access controls, to protect sensitive information. Ensuring observability practices comply with global data protection regulations is essential for safeguarding data while maintaining transparency and building customer trust.

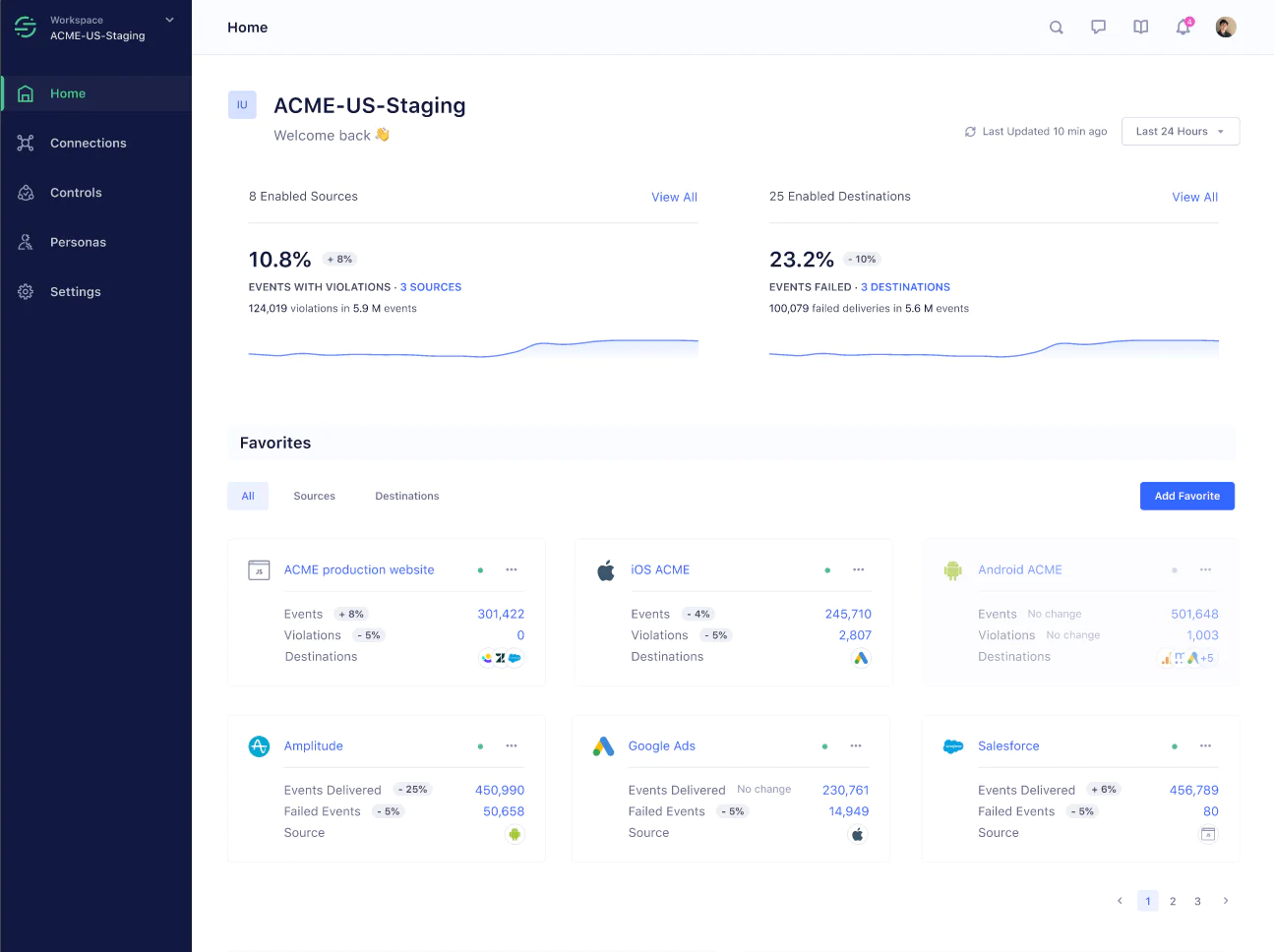

Segment makes it easy for users to get a clear picture of their data. And Segment Workspace is at the core of this.

Here, users quickly see what's happening with their data – reviewing event audits, finding errors, and catching any violations. It's all about keeping things straightforward, offering the tools needed to keep your data in check. This feature is just one part of Segment's broad array of tools and capabilities designed to enhance data observability.

Twilio Segment's Public API is about making data observability straightforward and actionable. It seamlessly integrates Event Delivery metrics into tools like DataDog, Grafana, or New Relic, giving you a clear picture of event volumes and delivery success. This API isn't just for spotting issues – it's about proactively understanding your data flow and keeping everything running smoothly.

With the Public API, you're equipped to handle the complexities of modern data ecosystems, ensuring that every piece of data is accounted for and analyzed. It's perfect for teams looking to centralize their observability efforts, offering a detailed yet digestible view of data movements across your workspace.

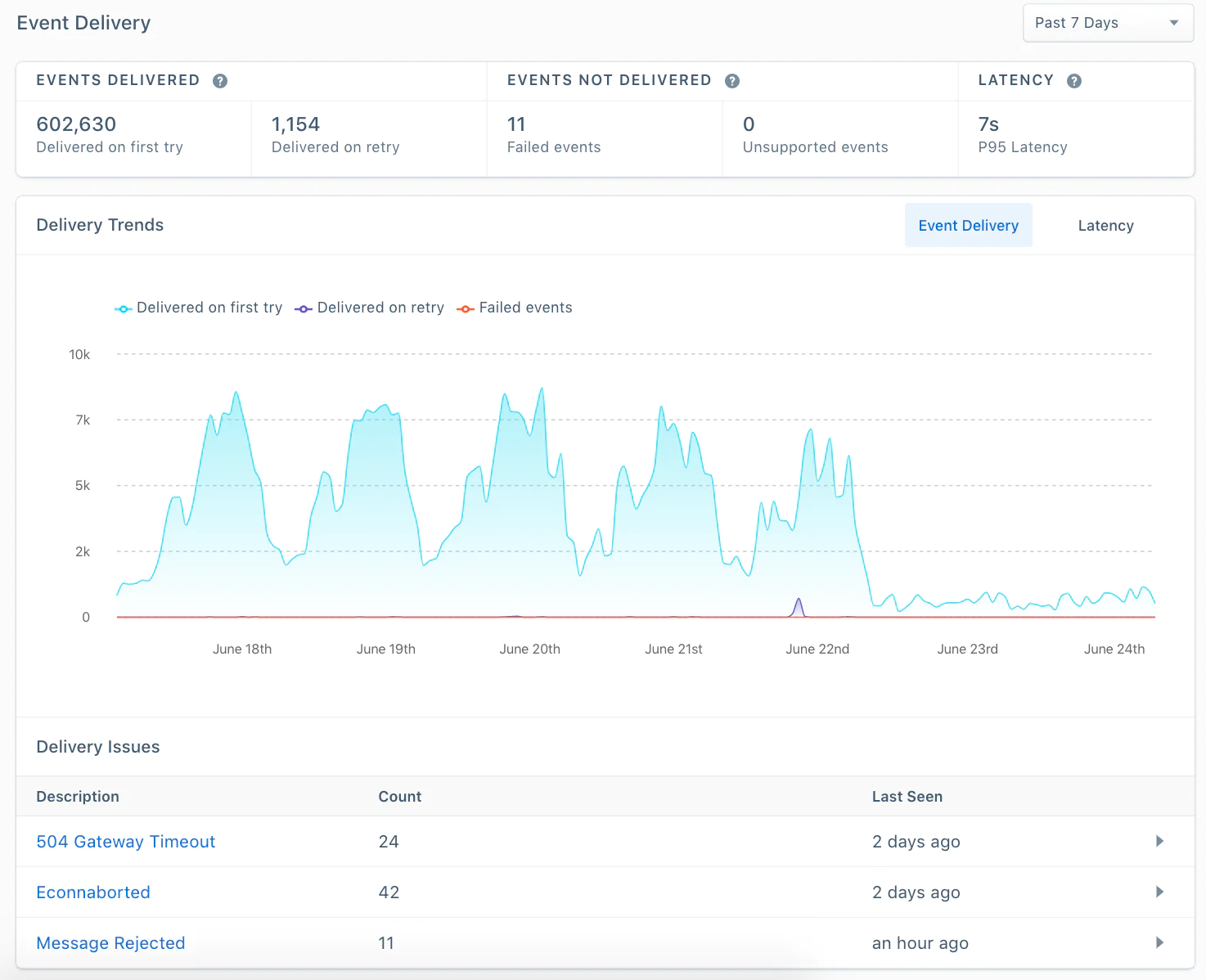

Twilio Segment's Event Delivery dashboard is designed to show whether your data is reaching its intended destinations and to surface any issues Segment encounters during data delivery. This feature is particularly valuable for server-side event streaming destinations, as it allows for detailed reporting on deliverability, which isn't available for client-side integrations because they communicate directly with the destination tools' APIs.

When you’re setting up a new destination or if you notice data missing in your analytics tools, the Event Delivery dashboard offers near real-time insights into the flow of data to destinations like Google Analytics. This immediate feedback loop is vital for troubleshooting and ensuring that your setup is correct from the start.

The dashboard breaks down its reporting into three main areas:

Key metrics: Shows the number of messages delivered and not delivered and the P95 latency, providing a snapshot of data delivery performance.

Error details: Offers a summary of errors encountered with clickable rows for more detailed insights, including descriptions, actions to take, and links to helpful documentation.

Delivery trends: Helps you spot when issues begin, cease, or show patterns over time, aiding in quicker diagnosis and resolution of delivery problems.
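To make the key metrics above concrete, the sketch below computes a delivery success rate and a P95 latency from sample numbers. It uses the nearest-rank percentile method, which is an assumption for illustration and not necessarily how the dashboard calculates its figures; the function names and sample values are also hypothetical.

```python
import math


def p95(latencies_ms: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not latencies_ms:
        raise ValueError("no latency samples")
    ordered = sorted(latencies_ms)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def delivery_summary(delivered: int, not_delivered: int,
                     latencies_ms: list[float]) -> dict[str, float]:
    """Summarize delivery performance for one destination."""
    total = delivered + not_delivered
    return {
        "success_rate": delivered / total if total else 0.0,
        "p95_latency_ms": p95(latencies_ms),
    }


# Hypothetical sample: 20 latency measurements from 10 ms to 200 ms.
samples = [float(ms) for ms in range(10, 210, 10)]
summary = delivery_summary(delivered=980, not_delivered=20, latencies_ms=samples)
```

A P95 latency means that 95 percent of sampled deliveries completed at or below that value, which makes it less sensitive to a few extreme outliers than a maximum.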

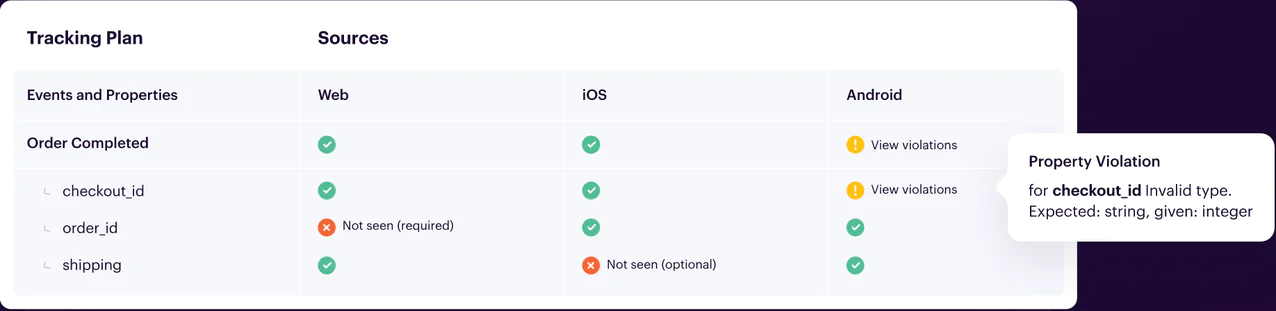

Segment Protocols makes it easy to keep your data clean and reliable. Just link your Tracking Plan to a Source, and you'll see a clear list of any event issues. This handy feature is right in the Schema tab – just look for the "Violations" button. You can even filter these violations by time, focusing on the last day, week, or month.

Segment spells out exactly what's wrong, be it missing data, incorrect data types, or values that just don't fit your rules. For any specific issue, a quick click shows you the payloads causing trouble so your engineering team can fix things quickly.
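The kinds of violations described above can be sketched as a check of an event against a plan of expected properties. The plan structure below is a simplified stand-in invented for this example and is not the actual Tracking Plan format used by Protocols; `find_violations` is likewise hypothetical.

```python
# Hypothetical, simplified plan: event name -> required property -> type.
PLAN = {
    "Order Completed": {"order_id": str, "total": float},
}


def find_violations(event: dict, plan: dict) -> list[str]:
    """List the ways an event deviates from the plan."""
    name = event.get("event")
    if name not in plan:
        return [f"unplanned event: {name}"]
    violations = []
    props = event.get("properties", {})
    for prop, expected_type in plan[name].items():
        if prop not in props:
            violations.append(f"missing property: {prop}")
        elif not isinstance(props[prop], expected_type):
            violations.append(
                f"wrong type for {prop}: expected {expected_type.__name__}"
            )
    return violations


# An event with a numeric order_id and no total.
bad_event = {"event": "Order Completed", "properties": {"order_id": 123}}
issues = find_violations(bad_event, PLAN)
```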

Want to stay on top of issues? Segment lets you forward these alerts, setting up custom notifications or deeper analysis to keep your data on track.

With Protocols by your side, Segment ensures your data stays spotless, supporting smooth and accurate tracking across your digital landscape. It’s all about making data management a breeze, helping you solve problems before they impact your operations.

The Source Debugger in Twilio Segment is designed to enhance your data observability by ensuring that API calls from your website, mobile app, or servers are successfully arriving at your Segment Source. This real-time tool is pivotal for swiftly troubleshooting your Segment setup, allowing you to verify the format of incoming calls without delay.

Key features:

Real-time verification: Instantly confirms the arrival of API calls to your Segment Source, streamlining the troubleshooting process.

Sampled event view: Provides a glimpse into the structure of incoming data through a sample of events, capped at 500, to test specific parts of your implementation.

Live stream with pause functionality: Displays a live stream of sampled events, with the option to pause the stream for detailed examination.

Search and advanced search options: Enables searching within the Debugger using event payload information with advanced options for more precise filtering.

Pretty and raw views: Offers two perspectives on the event data – the Pretty view for an approximate recreation of the API call and the Raw view for the complete JSON object received by Segment.

It's important to note that the Source Debugger does not provide an exhaustive record of all events sent to your Segment workspace. We recommend setting up a data warehouse or an S3 destination for a comprehensive view of all your events.

While off-the-shelf analytics tools like Google Analytics and Mixpanel provide quick insights, data analysts and data scientists require access to raw data for deeper insights. Segment offers various Storage Destinations to meet these needs, including Data Warehouses, AWS S3, Google Cloud Storage, and Segment Data Lakes.

Storage Destinations offer valuable insights into event data, facilitating deeper analysis and quality assurance efforts. With a full record of events at your disposal, you can identify trends, troubleshoot issues, and derive actionable insights to optimize your data strategy.

Data Warehouses, in particular, are a pivotal component of data management strategy. Acting as a centralized repository for data collected from various sources, Data Warehouses facilitate structured data storage and efficient querying for analytical purposes.

When selecting a data warehouse, consider factors such as the type of data collected, the number of data sources, and intended data usage. Examples of data warehouses include Amazon Redshift, Google BigQuery, and Postgres, each offering distinct advantages for data analytics.

Segment's data loading process into warehouses involves two key steps:

Ping: Segment servers establish connections with the warehouse, conducting necessary queries to ensure synchronization. Sync failures may result from blocked VPN/IP, warehouse accessibility issues, or permission discrepancies.

Load: Transformed data is deduplicated and loaded into the warehouse. Post-loading, any configured queries are executed, facilitating further data analysis and insight generation.
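The deduplication in the Load step can be pictured as keeping only the first occurrence of each event. The sketch below assumes every event carries a unique identifier in a `messageId` field and keeps events in arrival order; it illustrates the idea only and does not describe Segment's internal implementation.

```python
def deduplicate(events: list[dict], key: str = "messageId") -> list[dict]:
    """Keep the first occurrence of each event, identified by `key`."""
    seen = set()
    unique = []
    for event in events:
        identifier = event[key]
        if identifier not in seen:
            seen.add(identifier)
            unique.append(event)
    return unique


# Hypothetical batch in which one event was received twice.
batch = [
    {"messageId": "a1", "event": "Signed Up"},
    {"messageId": "b2", "event": "Order Completed"},
    {"messageId": "a1", "event": "Signed Up"},
]
to_load = deduplicate(batch)
```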

Data observability and DevOps share a common goal of improving system reliability and performance through increased visibility and proactive problem-solving. In DevOps, observability helps teams monitor and troubleshoot operational issues in software development and deployment processes.

Similarly, data observability applies these principles to data management, enabling teams to ensure high-quality data, data reliability, and timely delivery within their IT systems. It's a natural extension of DevOps practices into data management, emphasizing the importance of agility, collaboration, and continuous improvement in both domains.

While both data observability and data monitoring aim to ensure the health and performance of data systems, they differ in scope and approach.

Data monitoring involves tracking predefined metrics and logs to identify when things go wrong, focusing on known issues. It's reactive, alerting teams to problems after they occur.

On the other hand, data observability provides a more holistic view of the data system's health, enabling teams to understand and diagnose issues deeply. It encompasses monitoring but allows teams to explore data in real time, identify unknown issues, and understand their systems' internal state. Observability includes using metrics, logs, and traces to offer insights into data quality, pipeline performance, and more, facilitating a proactive approach to data management.

Twilio Segment offers tools for better data observability:

Workspace: Easily monitor data, spot audits, errors, and violations.

Public API: Integrates metrics into tools for proactive management.

Event Delivery dashboard: Near real-time insights on delivery performance and errors.

Protocols: Identifies and resolves event issues quickly.

Source Debugger: Verifies API calls in real time for swift troubleshooting.

Storage Destinations: Provides raw data access for deeper analysis, especially through Data Warehouses.