Data Lake Architecture: Key Features & How to Implement

Learn the key components of a data lake architecture.

Data lakes are repositories that can store large volumes of unprocessed data, whether it's structured, semi-structured, or unstructured. Unlike data warehouses, data lakes have no predefined schema, which means data can be stored in its original format.

The popularity of data lakes is in large part due to this flexibility, especially in an age where data is being generated at an exponential rate in a wide variety of formats (e.g., video, sensor logs, images, and text).

Underneath every high-performing data lake lies a sturdy architecture that supports ingesting, processing, and storing all of that data.

Data lake architecture refers to the layers or zones inside a data lake that store and process data in its native form. As opposed to data warehouses, which use a hierarchical structure, data lakes are built on a flat architecture.

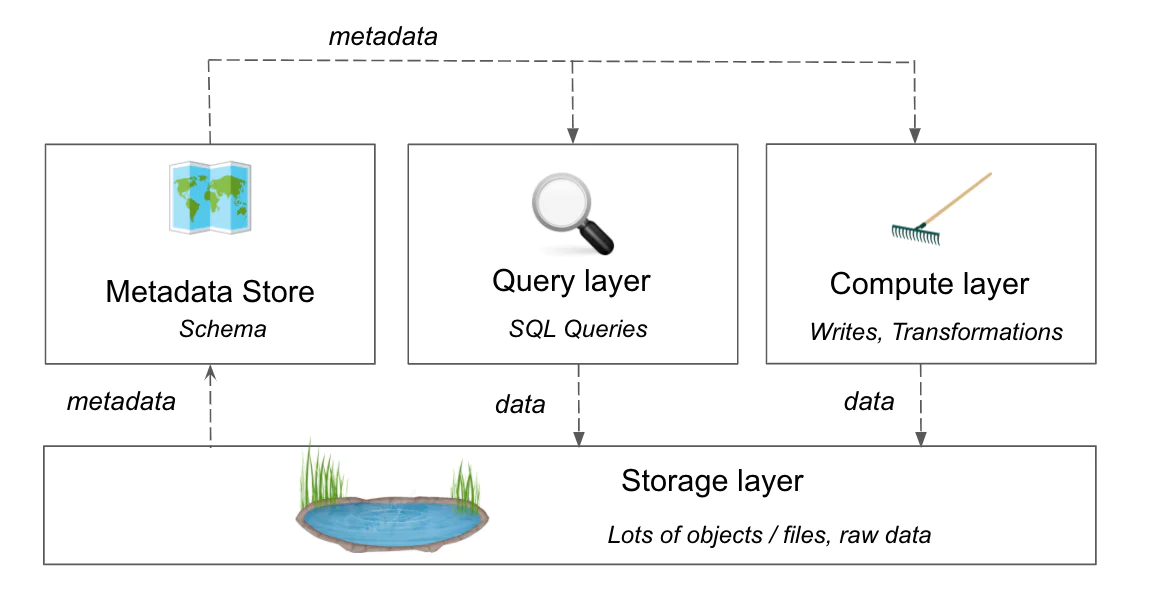

While the exact architecture varies from company to company, all data lakes can ingest data from multiple sources, store it in its native format, and process it to support downstream analytics. Data lake architecture is typically described in terms of four layers:

Storage layer

Metadata layer

Query layer

Compute layer

There is no single set architecture for a data lake, but these four layers are typically considered staples of any data lake architecture. We describe each of them in more detail below.

The storage layer is where vast amounts of raw data can be stored in its original format. Since data lakes store all types of data, object storage has become a popular choice for data retrieval and management. (With object storage, data is treated as a distinct entity or self-contained unit, with each entity having its own metadata and a unique identifier.)

Popular cloud-based object storage services include Amazon S3 and Azure Data Lake Storage Gen2 (ADLS), which both have integrations with Segment.
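The object storage model described above can be sketched in a few lines of Python. This is a toy, in-memory stand-in and not the Amazon S3 or ADLS API. The class and method names (`ObjectStore`, `put`, `get`, `list_keys`) and the sample keys are hypothetical. The sketch shows each object carrying its own metadata and unique identifier. It also shows a flat key namespace, in the style of Amazon S3, where slashes in a key only look like folders and listing works by prefix.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class StoredObject:
    """A self-contained unit: raw bytes plus its own metadata and a unique ID."""
    key: str
    data: bytes
    metadata: dict
    object_id: str = field(default_factory=lambda: uuid.uuid4().hex)


class ObjectStore:
    """Hypothetical in-memory object store with a flat namespace.

    Keys such as "raw/web/2024-01-01.json" are plain strings; the "/"
    characters only look like folders, and listing matches a key prefix.
    """

    def __init__(self):
        self._objects = {}

    def put(self, key, data, **metadata):
        # Stamp every object with a creation time alongside caller metadata.
        metadata.setdefault("created_at", datetime.now(timezone.utc).isoformat())
        obj = StoredObject(key=key, data=data, metadata=metadata)
        self._objects[key] = obj
        return obj

    def get(self, key):
        return self._objects[key]

    def list_keys(self, prefix=""):
        # Prefix match over a flat key space, not a directory walk.
        return sorted(k for k in self._objects if k.startswith(prefix))


store = ObjectStore()
store.put("raw/web/2024-01-01.json", b'{"event": "page_view"}', source="web", format="json")
store.put("raw/mobile/2024-01-01.csv", b"event,user\napp_open,42\n", source="mobile", format="csv")
store.put("raw/images/logo.png", b"\x89PNG...", source="cms", format="png")

print(store.list_keys("raw/web/"))  # ['raw/web/2024-01-01.json']
print(store.get("raw/mobile/2024-01-01.csv").metadata["format"])  # csv
```

Each object keeps its format and source in its own metadata. Because of this, JSON, CSV, and image data can sit side by side without a shared schema.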

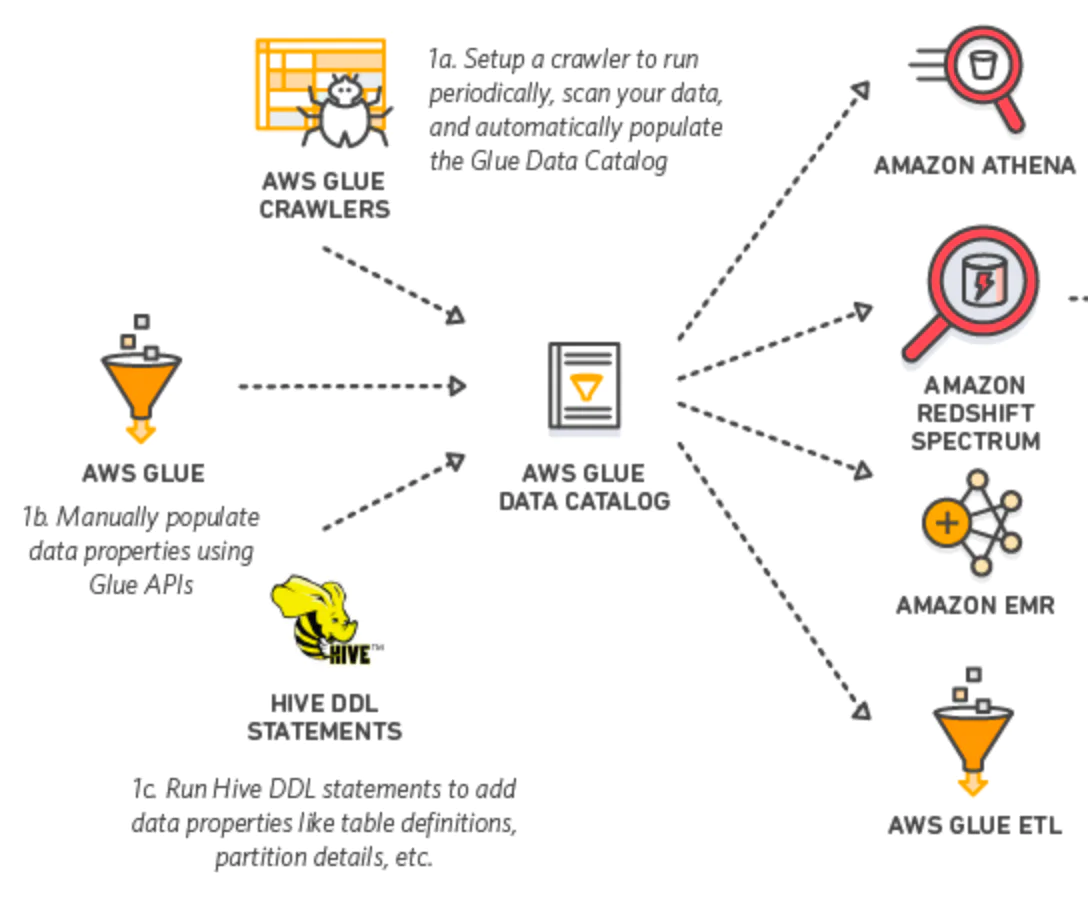

Metadata is data about data, providing important context and details like the data source, file format, data creation date, access permissions, and more.

The metadata store (also known as a metadata catalog) is a dedicated zone that manages and stores all the metadata associated with different objects or datasets in the data lake.

The AWS Glue Data Catalog is a helpful tool for centrally storing metadata, such as table definitions and schema details.
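You can picture the metadata layer as a registry keyed by dataset name. The sketch below is illustrative only and is not the AWS Glue Data Catalog API. `DatasetMetadata`, `MetadataCatalog`, and their fields are assumptions modeled on the kinds of metadata listed above: source, file format, creation date, and access permissions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DatasetMetadata:
    """Hypothetical metadata record describing one dataset in the lake."""
    name: str
    location: str       # where the objects live in the storage layer
    source: str         # system the data came from
    file_format: str    # e.g. "parquet", "json", "csv"
    created: date
    schema: dict        # column name -> type, as recorded by the catalog
    readers: frozenset  # roles allowed to read the dataset


class MetadataCatalog:
    """Hypothetical central registry of dataset metadata."""

    def __init__(self):
        self._entries = {}

    def register(self, meta):
        # Refuse silent overwrites so each dataset has exactly one entry.
        if meta.name in self._entries:
            raise ValueError(f"dataset {meta.name!r} is already registered")
        self._entries[meta.name] = meta

    def describe(self, name):
        return self._entries[name]

    def by_format(self, file_format):
        return sorted(m.name for m in self._entries.values() if m.file_format == file_format)


catalog = MetadataCatalog()
catalog.register(DatasetMetadata(
    name="web_events",
    location="raw/web/",
    source="website",
    file_format="json",
    created=date(2024, 1, 1),
    schema={"event": "string", "user_id": "int", "ts": "timestamp"},
    readers=frozenset({"analyst", "data_engineer"}),
))
print(catalog.describe("web_events").schema["user_id"])  # int
print(catalog.by_format("json"))  # ['web_events']
```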

The query layer is where users interact with and query data in the data lake (via SQL or NoSQL interfaces). This layer is instrumental for analysis and helps make data more accessible for reporting and business intelligence purposes.

One common example of a query engine used for data lakes is Amazon Athena, which runs SQL queries directly against data stored in Amazon S3 and supports many file formats, including Parquet, ORC, CSV, and JSON.
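The minimal sketch below shows what the query layer does by running SQL over raw JSON records. It uses Python's built-in `sqlite3` module as a local stand-in for an engine like Amazon Athena, which queries files in place in Amazon S3. The sample events and the `events` table name are made up for illustration.

```python
import json
import sqlite3

# Hypothetical raw newline-delimited JSON, as it might sit in the storage layer.
raw_events = """\
{"event": "page_view", "user_id": 1, "page": "/home"}
{"event": "page_view", "user_id": 2, "page": "/pricing"}
{"event": "signup", "user_id": 2}
{"event": "page_view", "user_id": 1, "page": "/pricing"}
"""

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event TEXT, user_id INTEGER, page TEXT)")

# Parse each line and load it; fields missing from a record become NULL.
rows = []
for line in raw_events.splitlines():
    record = json.loads(line)
    rows.append((record["event"], record["user_id"], record.get("page")))
conn.executemany("INSERT INTO events VALUES (?, ?, ?)", rows)

# A typical reporting query: page views per page.
result = conn.execute(
    "SELECT page, COUNT(*) AS views FROM events "
    "WHERE event = 'page_view' GROUP BY page ORDER BY views DESC, page"
).fetchall()
print(result)  # [('/pricing', 2), ('/home', 1)]
```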

The compute layer is where you’re able to perform data transformations or modifications, ensuring data is easily accessible and primed for decision-making.

A compute layer will often include:

Data processing engines like Apache Spark or Hadoop, which facilitate the distributed processing of large datasets.

Integrations with various analytics and BI tools, along with the data catalog.

Optimization techniques to enhance performance, like parallel processing, caching, and indexing, especially when dealing with large-scale data.

Data transformation pipelines, to transform raw data into a suitable format for analysis, reporting, or storage.
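The following single-machine sketch illustrates a data transformation pipeline like the ones listed above. The partitions, column names, and cleaning rules are hypothetical. A production compute layer would typically hand this work to a distributed engine such as Apache Spark. Here, a thread pool only stands in for splitting the work by partition.

```python
import csv
import io
from concurrent.futures import ThreadPoolExecutor

# Hypothetical raw partitions: one CSV string per day.
raw_partitions = {
    "2024-01-01": "order_id,amount,country\n1,19.99,US\n2,not_a_number,DE\n3,5.00,us\n",
    "2024-01-02": "order_id,amount,country\n4,42.50,FR\n5,,US\n",
}


def transform(day, text):
    """Parse, validate, and normalize one partition of raw CSV."""
    clean = []
    for row in csv.DictReader(io.StringIO(text)):
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows whose amount cannot be parsed
        clean.append({
            "day": day,
            "order_id": int(row["order_id"]),
            "amount": amount,
            "country": row["country"].upper(),  # normalize country codes
        })
    return clean


# Process partitions concurrently, then combine the cleaned rows in input order.
with ThreadPoolExecutor(max_workers=2) as pool:
    results = pool.map(lambda item: transform(*item), raw_partitions.items())
    cleaned = [row for part in results for row in part]

# Aggregate into an analysis-ready shape.
revenue_by_country = {}
for row in cleaned:
    revenue_by_country[row["country"]] = round(
        revenue_by_country.get(row["country"], 0.0) + row["amount"], 2
    )
print(revenue_by_country)  # {'US': 24.99, 'FR': 42.5}
```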

Data lake architecture varies from one company to the next, but a well-designed data lake shares the following characteristics: scalability, flexibility, centralization, and efficient data ingestion.

Scalability refers to a data lake's ability to expand and store a growing volume of data assets without degrading performance, even during a surge in data collection such as a holiday season.

Flexibility refers to the ability to handle diverse types of data, evolve with changing data sources, and support different analytics workloads. A few characteristics of flexibility include:

Schema-on-read approach (i.e., data is ingested without a predefined schema, and structure is applied when the data is read)

A schema that can adapt and change over time

Supporting mixed workloads (e.g., real-time processing, batch processing, machine learning)

Supporting a range of data formats (like Parquet, JSON, and CSV)

And more!
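The short sketch below illustrates schema-on-read and schema evolution. The records, field names, and the `read_with_schema` helper are hypothetical. Raw JSON is stored untouched, and types and defaults are applied only when the data is read. As a result, a field added later does not break older records.

```python
import json

# Hypothetical raw records stored exactly as they arrived. A producer later
# added a "plan" field; nothing was rejected at write time.
raw_lines = [
    '{"user_id": 1, "event": "login"}',
    '{"user_id": "2", "event": "login", "plan": "pro"}',
    '{"user_id": 3, "event": "logout", "plan": "free"}',
]

# The schema lives with the reader, not the storage: field -> (type, default).
READ_SCHEMA = {
    "user_id": (int, None),
    "event": (str, None),
    "plan": (str, "unknown"),  # newer field; older records get a default
}


def read_with_schema(lines, schema):
    """Apply a schema while reading, coercing types and filling gaps."""
    for line in lines:
        record = json.loads(line)
        yield {
            name: cast(record[name]) if name in record else default
            for name, (cast, default) in schema.items()
        }


rows = list(read_with_schema(raw_lines, READ_SCHEMA))
print(rows[0])                 # {'user_id': 1, 'event': 'login', 'plan': 'unknown'}
print(rows[1]["user_id"] + 1)  # 3
```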

Data lakes break down silos by pulling data from multiple sources into a centralized location. This in turn helps eliminate blind spots or inconsistencies that might otherwise occur in reporting, giving teams a complete picture of user behavior and business performance.
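The hedged sketch below makes the silo-breaking point concrete. Two hypothetical sources, a CRM export and web events, use different field names for the same user. Mapping both into one shared shape in a central store lets a single lookup see that user's full history. The field names and the `to_common` helper are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical records from two separate systems with different field names.
crm_contacts = [
    {"contact_id": 7, "tier": "enterprise"},
]
web_events = [
    {"uid": 7, "action": "viewed_pricing"},
    {"uid": 7, "action": "started_trial"},
    {"uid": 9, "action": "viewed_blog"},
]


def to_common(record, source):
    """Map each source's fields onto one shared shape keyed by user ID."""
    if source == "crm":
        return {"user_id": record["contact_id"], "source": source, "attrs": {"tier": record["tier"]}}
    return {"user_id": record["uid"], "source": source, "attrs": {"action": record["action"]}}


# Land everything in one central collection...
central = [to_common(r, "crm") for r in crm_contacts] + [to_common(r, "web") for r in web_events]

# ...so a single lookup can see a user across systems.
profiles = defaultdict(list)
for rec in central:
    profiles[rec["user_id"]].append((rec["source"], rec["attrs"]))

print(profiles[7])
# [('crm', {'tier': 'enterprise'}), ('web', {'action': 'viewed_pricing'}), ('web', {'action': 'started_trial'})]
```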

Your data lake architecture should be able to ingest different kinds of data quickly and efficiently, including both real-time streaming data and bulk data assets. To plan ingestion well, you first need to know how many data sources you'll connect to your data lake and how large their datasets are.
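A quick back-of-the-envelope estimate helps with this planning. All source names, event counts, and average event sizes below are invented placeholders, so substitute your own figures. The estimate covers raw volume only and ignores compression, replication, and growth.

```python
# Hypothetical sources: (name, events per day, average bytes per event, mode)
sources = [
    ("web_events", 5_000_000, 800, "streaming"),
    ("mobile_events", 2_000_000, 600, "streaming"),
    ("crm_export", 50_000, 2_000, "batch"),
]

GB = 10**9  # decimal gigabytes

# Raw bytes landed per day for each source.
daily_bytes = {name: per_day * size for name, per_day, size, _ in sources}
total_daily_gb = sum(daily_bytes.values()) / GB
yearly_tb = total_daily_gb * 365 / 1000

for name, per_day, size, mode in sources:
    print(f"{name:<14} {mode:<9} {daily_bytes[name] / GB:6.2f} GB/day")
print(f"total: {total_daily_gb:.2f} GB/day, roughly {yearly_tb:.2f} TB/year of raw data")
```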

Before designing the architecture of your data lake, certain elements must be in place to ensure it performs well and isn’t vulnerable to security threats.

Developing a data lake architecture without data governance is akin to building a house without a blueprint. Data governance encompasses all the policies and practices that control how a company collects, stores, and uses its data. It establishes rules for handling sensitive information, which helps prevent costly regulatory violations.

A data governance framework will help your organization implement a concrete structure for managing data, including the roles and responsibilities of individual data stakeholders. The first step in developing a framework is to assign ownership to a person, such as a data architect, or a team that will establish data rules and standards.
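One way to make ownership and standards enforceable is to record them as data and check them automatically. The sketch below is a hypothetical example, not a standard framework. The sensitivity levels, the 365-day retention rule for restricted data, and the `DatasetPolicy` fields are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical classification scheme; your governance team defines the real one.
SENSITIVITY_LEVELS = ("public", "internal", "confidential", "restricted")


@dataclass
class DatasetPolicy:
    dataset: str
    owner: str           # accountable person or team
    sensitivity: str     # one of SENSITIVITY_LEVELS
    retention_days: int


def validate(policies):
    """Return a list of governance violations instead of failing silently."""
    problems = []
    for p in policies:
        if not p.owner:
            problems.append(f"{p.dataset}: no owner assigned")
        if p.sensitivity not in SENSITIVITY_LEVELS:
            problems.append(f"{p.dataset}: unknown sensitivity {p.sensitivity!r}")
        # Hypothetical rule: restricted data may be kept for at most a year.
        if p.sensitivity == "restricted" and p.retention_days > 365:
            problems.append(f"{p.dataset}: restricted data kept longer than 365 days")
    return problems


policies = [
    DatasetPolicy("web_events", "analytics-team", "internal", 730),
    DatasetPolicy("customer_pii", "", "restricted", 1095),
]
for issue in validate(policies):
    print(issue)
# customer_pii: no owner assigned
# customer_pii: restricted data kept longer than 365 days
```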

Whether you're hosting your data lake on-premises or in the cloud, you need strong security measures to minimize the risk of attackers getting hold of your data. Security covers both third parties who want to exploit your data and internal team members' access to the data lake. Access controls ensure that only authorized employees can access and modify the data in the lake.

Encrypting data assets will help prevent anyone who does gain unauthorized access from being able to read and use the data.
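Access controls are commonly modeled as roles mapped to permissions. The minimal, deny-by-default sketch below uses hypothetical users, roles, and zone prefixes (`raw/`, `curated/`). In practice, the cloud provider's identity and access management service usually enforces these rules rather than application code, and encryption is handled separately.

```python
# Hypothetical role -> permissions map. Each permission is (action, zone prefix).
ROLE_PERMISSIONS = {
    "analyst": {("read", "curated/")},
    "data_engineer": {
        ("read", "raw/"), ("write", "raw/"),
        ("read", "curated/"), ("write", "curated/"),
    },
}

# Hypothetical user -> roles assignment.
USER_ROLES = {"maya": {"analyst"}, "li": {"data_engineer"}}


def is_allowed(user, action, key):
    """Deny by default; allow only if one of the user's roles grants the action on the key's zone."""
    for role in USER_ROLES.get(user, set()):
        for allowed_action, prefix in ROLE_PERMISSIONS.get(role, set()):
            if action == allowed_action and key.startswith(prefix):
                return True
    return False


print(is_allowed("maya", "read", "curated/sales/2024.parquet"))   # True
print(is_allowed("maya", "write", "curated/sales/2024.parquet"))  # False
print(is_allowed("intruder", "read", "raw/web/events.json"))      # False
```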

A data catalog contains information on the data assets stored in your data lake, and it’s essential to prevent your data lake from turning into a data swamp. It improves data trustworthiness with a shared glossary that explains the data terminology and facilitates the use of high-quality data across the organization.

Data catalogs also support self-service analytics, where business users can retrieve data without waiting for a data scientist or another member of the IT team to do it for them.
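Self-service lookup in a data catalog can be sketched as a search over dataset descriptions and tags, plus a shared glossary. Everything in this sketch is hypothetical: the entries, tags, the `certified` flag, and the glossary definition. Real catalogs provide richer search through their own interfaces. Filtering to certified datasets by default is one way a catalog can steer users toward trusted, high-quality data.

```python
# Hypothetical catalog entries with business-friendly descriptions and tags.
CATALOG = [
    {"name": "web_events", "description": "Page views and clicks from the website",
     "tags": {"web", "behavior"}, "certified": True},
    {"name": "orders_daily", "description": "Cleaned orders aggregated by day",
     "tags": {"sales", "revenue"}, "certified": True},
    {"name": "orders_raw_dump", "description": "Unvalidated order export",
     "tags": {"sales"}, "certified": False},
]

# Hypothetical shared glossary so everyone uses the same definition of a term.
GLOSSARY = {
    "revenue": "Sum of order amounts after refunds, in USD.",
}


def search(term, certified_only=True):
    """Find datasets whose name, description, or tags mention the term."""
    term = term.lower()
    hits = []
    for entry in CATALOG:
        text = f"{entry['name']} {entry['description']}".lower()
        if term in text or term in entry["tags"]:
            if entry["certified"] or not certified_only:
                hits.append(entry["name"])
    return hits


print(search("orders"))                        # ['orders_daily']
print(search("orders", certified_only=False))  # ['orders_daily', 'orders_raw_dump']
print(GLOSSARY["revenue"])
```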

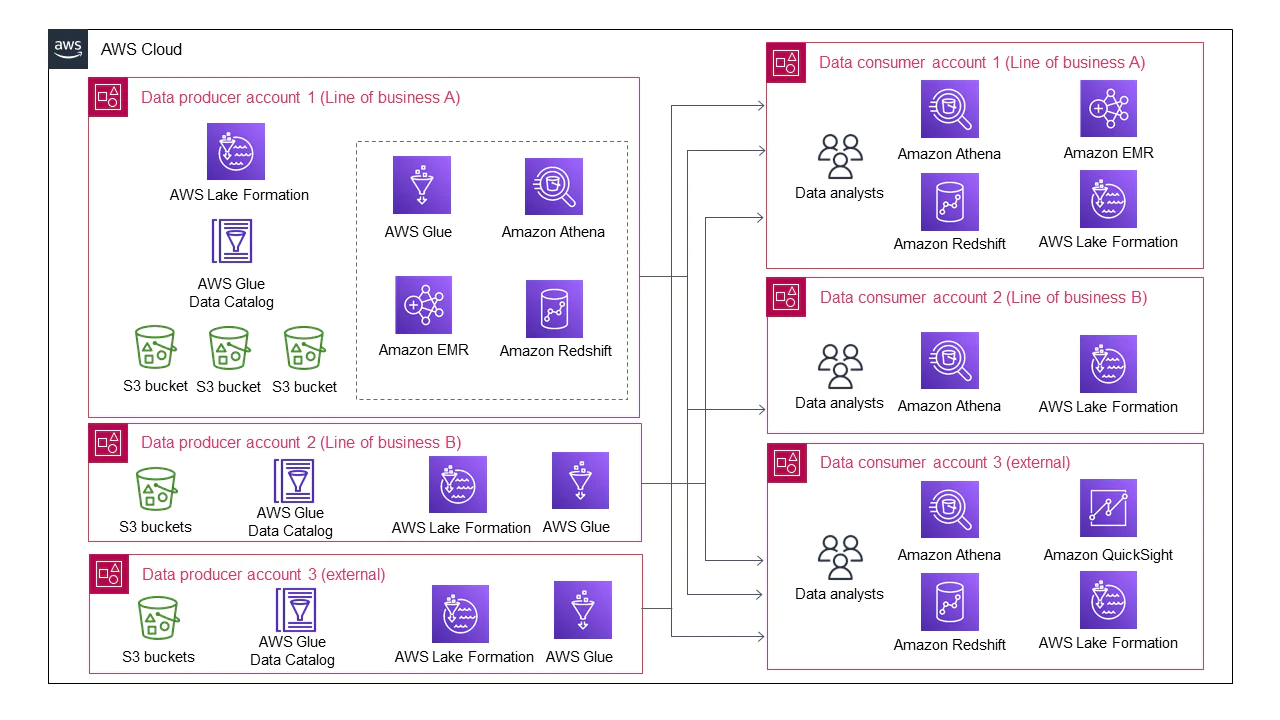

Scalability issues may also arise if you don’t design your data lake architecture to support a growing volume of data or data users. In the example below, Amazon Web Services illustrates how an unscalable architecture quickly becomes complex as soon as more than one data producer and data consumer joins the data lake.
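The AWS illustration is not reproduced here. One common way to quantify this growth, offered as an assumption rather than a description of that diagram, is to count integrations. If every producer connects directly to every consumer, links grow as producers × consumers. Routing everything through one central data lake needs only producers + consumers. The function names are hypothetical.

```python
def point_to_point(producers, consumers):
    # Every producer wires directly into every consumer.
    return producers * consumers


def hub(producers, consumers):
    # Every producer and consumer connects once, to a shared central lake.
    return producers + consumers


for n in (1, 2, 5, 10):
    print(f"{n:>2} producers x {n:>2} consumers: "
          f"{point_to_point(n, n):>3} direct links vs {hub(n, n):>2} via a hub")
```

With one producer and one consumer, a hub adds overhead. By ten of each, direct wiring needs 100 links versus 20, which shows why the complexity of point-to-point designs grows quickly.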

Data silos are another potential challenge. If your data lake needs to ingest data from numerous systems, you will need to invest considerable resources into connecting these systems to your data lake. If this process doesn’t go smoothly, you might temporarily lose data access, creating bottlenecks that have a ripple effect on the organization.

Building a data lake in-house typically takes anywhere from three months to a year. But organizations today have little time to spare when it comes to gleaning valuable consumer insights from their data. They need a way to accelerate the development of a data lake without creating scalability or performance issues.

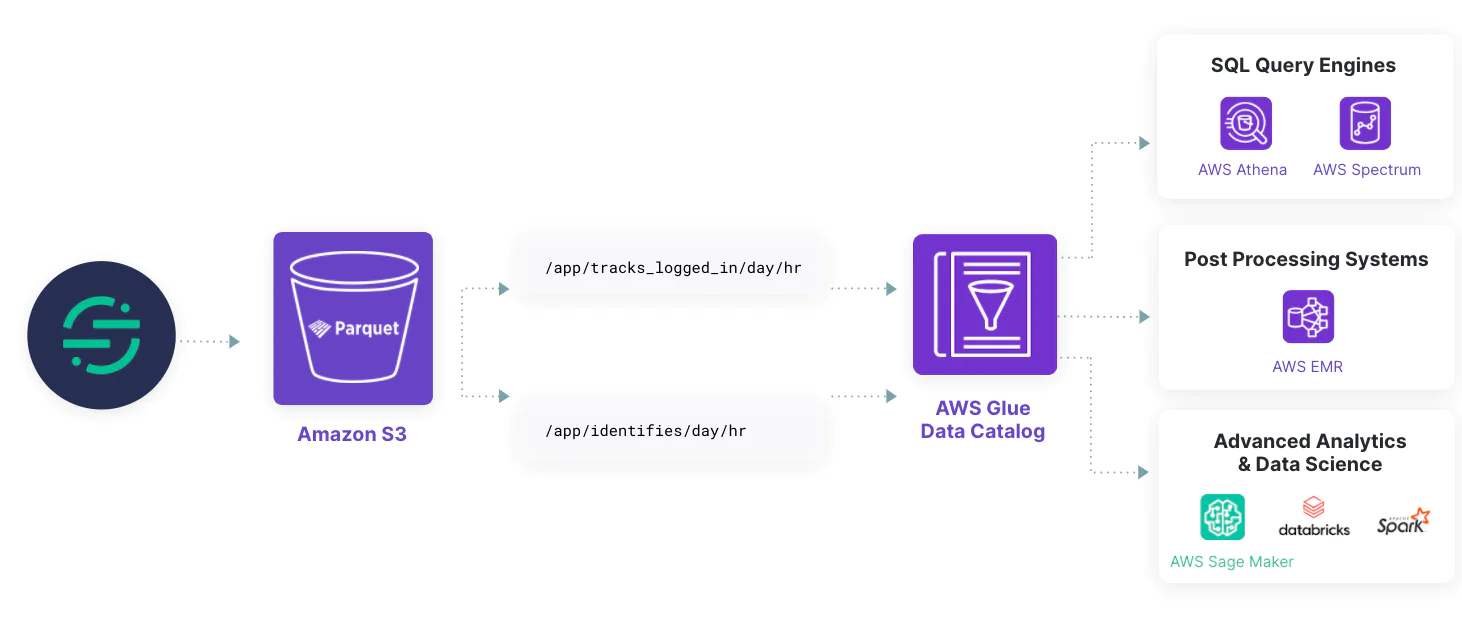

Segment Data Lakes provides a storage layer for schematized and optimized data alongside a metadata store, query layer, and compute layer. It allows organizations to deploy a data lake quickly and easily, and it currently supports AWS, Google Cloud, and Microsoft Azure.

This out-of-the-box foundation significantly reduces the time spent designing, building, and maintaining a custom data lake internally. Using Segment together with a data store like Amazon S3 also brings cost benefits. Rokfin, for example, reduced its data storage costs by 60% by using Segment Data Lakes.

The benefits of a well-designed data lake architecture include the flexibility to store different types of data, scalability that supports a growing volume of data, and fast performance.

The key layers of a data lake include:

Storage layer

Metadata layer

Query layer

Compute layer

Popular tools for building and implementing a data lake include Apache Hadoop, Apache Spark, AWS, Microsoft Azure, and Google Cloud.

A data lake uses a flat data storage architecture, while a data warehouse uses hierarchical tables to store its data.
